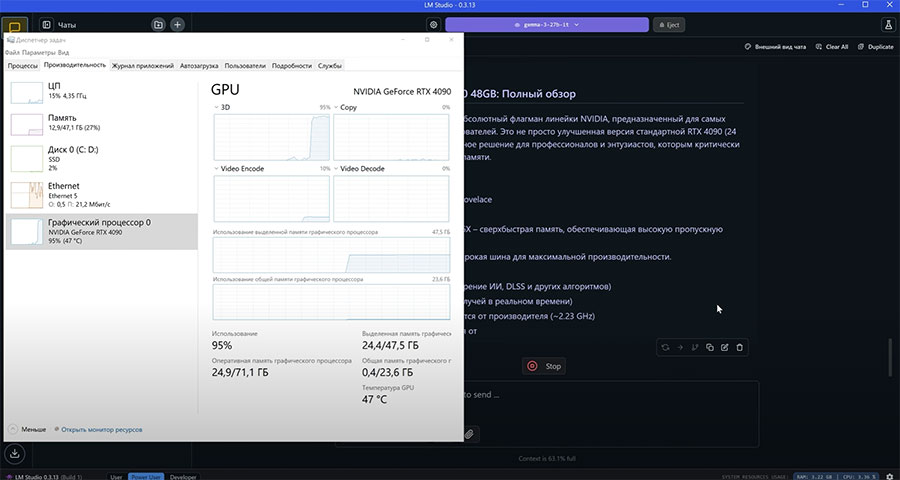

First Teardown: 48GB RTX 4090 Mod Runs 70B LLMs Flawlessly

While the official GPU market often leaves high-VRAM enthusiasts wanting more without entering the pricey data center territory, the hardware modding scene in China continues to innovate. Reports and reviews, including a recent one from Russian tech channel МК, showcase a compelling modification: an NVIDIA GeForce RTX 4090 equipped with a staggering 48GB of GDDR6X memory, double the stock configuration. For the technically adept, price-conscious user focused on running increasingly large language models locally, this development warrants a closer look.

Technical Breakdown: How 48GB on an AD102 Becomes Reality

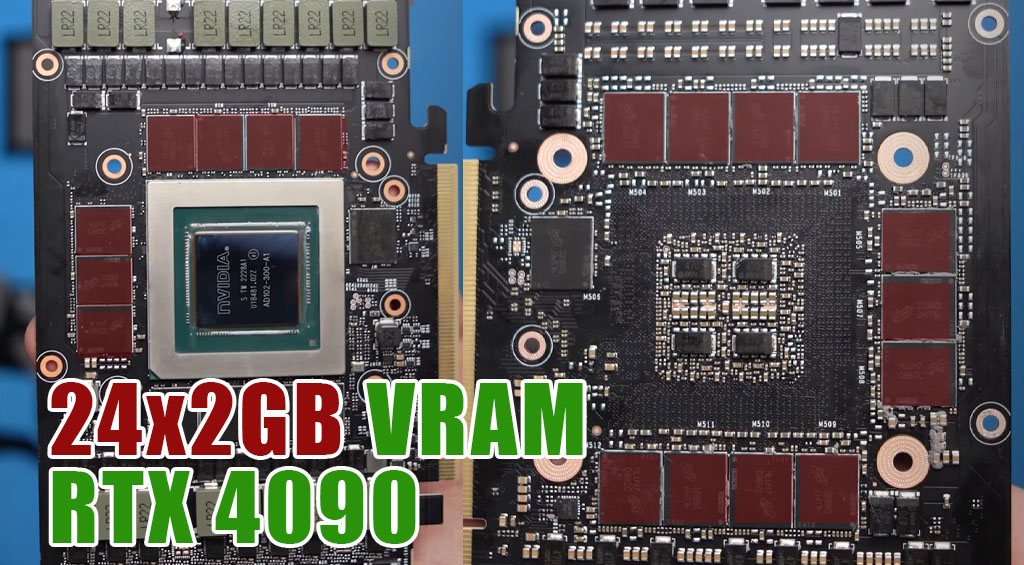

The standard RTX 4090 pairs NVIDIA’s AD102 GPU with 24GB of GDDR6X memory: 12x 2GB chips populated on one side of a relatively compact PCB. Reaching 48GB by swapping in denser chips isn’t an option, because GDDR6X packages larger than 2GB are not readily available. The modders circumvent this limitation by adopting a PCB design philosophy reminiscent of the older RTX 3090.

They employ a custom, longer PCB that features memory pads on both sides, allowing for the installation of 24x 2GB GDDR6X modules (12 front, 12 back). This effectively grafts the memory subsystem layout of a 3090 onto the modern AD102 core. The review notes the memory chips appear new and consistently dated, crucial for stability in such a configuration. The GPU chip itself, however, might be sourced from used cards (“slightly well done,” as the review puts it), a common practice in the refurb market but a point of consideration regarding longevity.
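
The capacity arithmetic behind both layouts can be sketched in a few lines. This is a minimal illustration, not something taken from the review: the 21 Gbps per-pin data rate is an assumption based on the stock RTX 4090 specification, and the function names are invented for the example.

```python
# Back-of-the-envelope memory math for the single-sided and double-sided layouts.
BUS_WIDTH_BITS = 384    # AD102 memory bus width, as stated in the article
CHIP_CAPACITY_GB = 2    # 2GB GDDR6X packages, as stated in the article
DATA_RATE_GBPS = 21.0   # ASSUMPTION: stock RTX 4090 per-pin data rate


def capacity_gb(chip_count: int, chip_capacity_gb: int = CHIP_CAPACITY_GB) -> int:
    """Total VRAM for a given number of memory packages."""
    return chip_count * chip_capacity_gb


def bandwidth_gb_s(bus_width_bits: int = BUS_WIDTH_BITS,
                   data_rate_gbps: float = DATA_RATE_GBPS) -> float:
    """Peak bandwidth: bus width (bits) x per-pin rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


for label, chips in (("stock, single-sided", 12), ("modded, double-sided", 24)):
    print(f"{label}: {capacity_gb(chips)} GB, peak {bandwidth_gb_s():.0f} GB/s")
```

Doubling the chip count doubles capacity but does not widen the bus, so the sketch reports the same peak bandwidth for both layouts, assuming the modded card keeps stock memory clocks.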

Crucially, these modifications seem to work seamlessly with standard NVIDIA drivers. This is likely thanks to leaked internal NVIDIA tools (such as MATS and MODS) that allow the video BIOS to be modified, including the reported memory capacity, without breaking the digital signatures NVIDIA’s drivers check for.
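
One way a buyer might confirm what the driver actually reports is to query `nvidia-smi` and check the total memory. The sketch below assumes `nvidia-smi` is on the PATH and uses its `--query-gpu=name,memory.total` CSV output; the helper names, the tolerance, and the sample output line are hypothetical and not taken from the review.

```python
import subprocess

# nvidia-smi prints one "name, MiB" line per GPU with these flags.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_memory(csv_text: str) -> list[tuple[str, int]]:
    """Parse 'name, MiB' lines into (name, total_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mib.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Run nvidia-smi and parse its output (requires an NVIDIA driver)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_memory(out)


def looks_like_48gb(total_mib: int, tolerance_mib: int = 1024) -> bool:
    """True if the reported total is within tolerance of 48 GiB."""
    return abs(total_mib - 48 * 1024) <= tolerance_mib


# HYPOTHETICAL sample output, for illustration only.
sample = "NVIDIA GeForce RTX 4090, 49140\n"
for name, mib in parse_gpu_memory(sample):
    print(name, mib, "MiB ->", "48GB class" if looks_like_48gb(mib) else "not 48GB")
```

On a machine with the card installed, calling `query_gpus()` in place of the sample string would perform the same check against live driver output. A correct capacity readout only shows what the BIOS reports, so a full-VRAM memory test is still worth running.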

The reviewed card features a dual-slot, blower-style (turbine) cooler. While incredibly loud under load (hitting 65 dB in tests), this design is thermally effective (keeping the GPU around 70°C, hotspot 78°C, memory 86°C) and, importantly for some users, allows for denser multi-GPU configurations in server racks or modified desktop cases compared to typical triple or quad-slot gaming coolers. Power is delivered via the standard 16-pin 12VHPWR connector.

Performance Implications for Local LLMs

For LLM inference, the primary metrics are VRAM capacity and memory bandwidth.

- VRAM Capacity: The 48GB VRAM is the headline feature. Referring to our VRAM requirement table, this single card can comfortably house models like:

  - 32B_q4_0 (19.1 GB)

  - 65B_q4_0 (36.8 GB)

  - 70B_q4_0 (38.9 GB)

  - 72B_q4_0 (37.42 GB)

- Memory Bandwidth: Because the card retains the RTX 4090’s 384-bit bus and similarly clocked GDDR6X memory, it keeps the same ~1 TB/s memory bandwidth. This is a significant advantage over popular budget multi-GPU options using older, slower cards like the Tesla P40 (~346 GB/s) or even newer low-power options like the L4 (300 GB/s). It also surpasses the Tesla V100 (900 GB/s) and second-hand 48GB data center options like the A40 (696 GB/s) and L40 (864 GB/s), which often command high prices and are passively cooled, relying on server chassis airflow.
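
As a rough illustration of the capacity point, the sketch below checks which of the listed models fit on cards of different sizes once some headroom is set aside. The model sizes come from the list above; the 4 GB headroom for KV cache and runtime overhead is an assumption chosen for the example (real needs depend on context length and backend), and GB and GiB are treated as interchangeable.

```python
# Quantized model sizes in GB, as listed in the article.
MODEL_SIZES_GB = {
    "32B_q4_0": 19.1,
    "65B_q4_0": 36.8,
    "70B_q4_0": 38.9,
    "72B_q4_0": 37.42,
}

# ASSUMPTION: memory reserved for KV cache, activations, and runtime overhead.
DEFAULT_HEADROOM_GB = 4.0


def fits(model_gb: float, vram_gb: float,
         headroom_gb: float = DEFAULT_HEADROOM_GB) -> bool:
    """True if the weights plus the reserved headroom fit in VRAM."""
    return model_gb + headroom_gb <= vram_gb


def models_that_fit(vram_gb: float,
                    headroom_gb: float = DEFAULT_HEADROOM_GB) -> list[str]:
    """Names of the listed models that fit on a card with vram_gb of memory."""
    return [name for name, size in MODEL_SIZES_GB.items()
            if fits(size, vram_gb, headroom_gb)]


for vram in (24, 32, 48):
    print(f"{vram} GB card: {models_that_fit(vram)}")
```

Under these assumptions only the 32B model fits on a 24GB or 32GB card, while all four fit on 48GB. Raising `headroom_gb` to model longer contexts shows how quickly the margin on the 70B model shrinks.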

Comparative Landscape: Where Does the Modded 4090 Fit?

For the enthusiast targeting large models, the hardware options typically involve trade-offs:

- vs. Single Stock RTX 4090 (24GB) / Used RTX 3090 (24GB): The modded card doubles the VRAM, eliminating the need for model splitting or heavy offloading for q4 models that outgrow 24GB, such as the 65B-72B class. Performance-per-watt and raw speed remain similar to a stock 4090, far exceeding the 3090.

- vs. Multi-GPU (e.g., 2x RTX 3090, 2x Tesla P40): Two 3090s provide 48GB total VRAM. However, this increases system complexity, power draw, and cost. Two P40s offer 48GB at a very low entry cost but suffer significantly from slow bandwidth, bottlenecking inference speed. The modded 4090 offers single-card simplicity with high bandwidth. The blower cooler also makes it physically easier to stack than two gaming 3090s/4090s.

- vs. Used Data Center GPUs (L40, A40 – 48GB; V100 – 32GB): The L40/A40 match the VRAM and offer official support but are passively cooled, requiring significant user effort for cooling in desktop/non-server environments. Their bandwidth (696 GB/s for the A40, 864 GB/s for the L40) falls short of the modded card’s ~1 TB/s. Price is a major factor; used L40/A40s can be very expensive, potentially exceeding the modded 4090’s cost. The V100 offers less VRAM (32GB) and requires similar cooling efforts.

- vs. Apple Silicon (M-series Ultra): High-end Mac Studios (M2/M3 Ultra) with 128GB+ unified memory offer massive capacity and good bandwidth (800 GB/s) in an integrated package, capable of running extremely large models (>100B parameters). However, the cost is substantial, and the ecosystem is less flexible for hardware tinkerers. The modded 4090 represents a high-performance discrete GPU path on the PC side, bridging the gap between consumer 24GB cards and the vast, expensive unified memory of top-tier Macs or complex multi-GPU PC builds needed for >100B models.
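
The bandwidth trade-offs in this comparison can be made more concrete with a common rule of thumb: when generating one token at a time, the GPU has to read roughly all model weights once per token, so memory bandwidth divided by model size gives an upper bound on tokens per second. The sketch below applies that rule to the 70B_q4_0 size quoted earlier. It is a ceiling under stated assumptions, not a benchmark: the bandwidth figures are approximate public spec values, multi-GPU splitting strategies are ignored, and real throughput will be lower.

```python
MODEL_GB = 38.9  # 70B_q4_0 size quoted in the article

# ASSUMPTION: approximate peak memory bandwidth per device, in GB/s.
BANDWIDTH_GB_S = {
    "Modded RTX 4090 48GB": 1008,
    "L40": 864,
    "Apple M2 Ultra": 800,
    "A40": 696,
    "Tesla P40": 346,
}


def decode_ceiling_tok_s(bandwidth_gb_s: float, model_gb: float) -> float:
    """Upper bound on single-stream decode speed: one full weight read per token."""
    return bandwidth_gb_s / model_gb


def rank_by_ceiling(model_gb: float = MODEL_GB) -> list[tuple[str, float]]:
    """Devices sorted from highest to lowest bandwidth-bound ceiling."""
    ceilings = {name: decode_ceiling_tok_s(bw, model_gb)
                for name, bw in BANDWIDTH_GB_S.items()}
    return sorted(ceilings.items(), key=lambda item: item[1], reverse=True)


for name, tok_s in rank_by_ceiling():
    print(f"{name:<22} <= {tok_s:5.1f} tok/s")
```

The ordering matters more than the absolute numbers: under this simplification the P40's ceiling is roughly a third of the modded 4090's, which is the bottleneck the multi-GPU comparison describes.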

Value Proposition and Caveats

Pros:

- Massive 48GB VRAM on a single card.

- Excellent ~1 TB/s memory bandwidth.

- Core performance of an RTX 4090.

- Blower cooler suitable for multi-GPU density.

- Works with standard drivers.

Cons:

- High cost.

- Likely uses a refurbished/used GPU core.

- No official warranty or support.

- Extreme noise under load.

- Potential variability in modding quality.

Conclusion

This modded 48GB RTX 4090 isn’t for everyone. Casual users or gamers gain little, as demonstrated by the gaming benchmarks in the review (most games don’t exceed 24GB). However, for the dedicated LLM enthusiast who needs more than 24GB or 32GB VRAM now, wants high memory bandwidth, prefers a single-card solution over multi-GPU complexity, and is willing to accept the risks and costs associated with unofficial modifications, this card presents a fascinating, albeit niche, option.

We’ll be keeping an eye on this space. Have you encountered these cards? What’s your strategy for tackling >24GB model requirements? Let us know in the comments!