Will the New DDR5-9000 and DDR5-8000 Memory Unlock Faster Local LLM Performance?

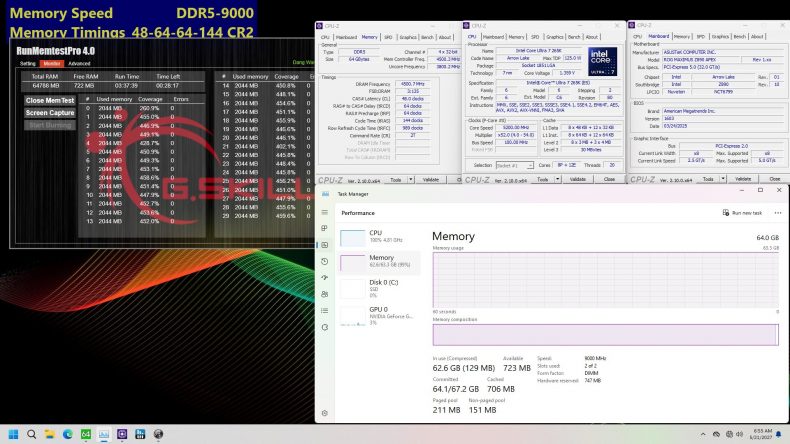

G.Skill just dropped a major announcement that should catch the eye of every LLM tinkerer and local inference enthusiast: two new high-end DDR5 kits, one at DDR5-8000 with 128 GB capacity (2x64GB), and another at a blistering DDR5-9000 with 64 GB capacity (2x32GB). These memory kits mark a new milestone in consumer-grade DRAM, but the real question for us is: what do they actually mean for local LLM inference?

Let’s break it down.

The Name of the Game Is Bandwidth

When it comes to large language model inference — especially running multi-billion parameter models locally with acceptable speed — memory bandwidth is king. Quantized LLMs like 70B_q4_0 or even 110B_q4_0 rely heavily on feeding your CPU or GPU fast enough. This makes RAM speeds almost as critical as capacity when you’re offloading weights to system memory.

Here’s how the new G.Skill kits stack up in terms of theoretical dual-channel memory bandwidth:

| Kit | Capacity | Bandwidth |

|---|---|---|

| DDR5-8000 | 128GB | 128 GB/s |

| DDR5-9000 | 64GB | 144 GB/s |

For comparison:

| Platform | Bandwidth |

|---|---|

| NVIDIA DGX Spark | 273 GB/s |

| Ryzen AI MAX+ 395 | 256 GB/s |

| Apple M3/M4 Max | 410 GB/s |

| RTX 4090 | 1.01 TB/s |

| RTX 5090 | 1.79 TB/s |

When looking at the G.Skill DDR5-8000 and DDR5-9000 kits, 144 GB/s of bandwidth from the DDR5-9000 kit might seem modest compared to other leading platforms. For reference, the NVIDIA DGX Spark delivers 273 GB/s, and the Ryzen AI MAX+ 395 offers 256 GB/s. Apple’s M3/M4 Max pushes the envelope with 410 GB/s of bandwidth, while the RTX 4090 offers an impressive 1.01 TB/s. The RTX 5090 takes things even further, with 1.79 TB/s of memory bandwidth.

Compared to other platforms, 144 GB/s is modest, especially when stacked against modern LLM solutions. But these new kits are aimed squarely at the DIY desktop segment — the kind of builds where maximizing local capacity and flexibility matters. For users running CPU-based inference or hybrid setups that offload model layers between GPU and system RAM, this level of bandwidth remains somewhat relevant.

Which Kit Is Right for You?

DDR5-9000 – 64 GB / 144 GB/s

This is the higher-bandwidth kit — and at 144 GB/s, it offers the fastest system memory access available to consumers at this capacity. While 64 GB might seem limiting, it’s actually a sweet spot for running 70B models (like LLaMA 2 70B_q4_0) with extended context sizes — think 32k+ tokens.

This makes it ideal for enthusiasts focused on inference quality, chat history length.

DDR5-8000 – 128 GB / 128 GB/s

Slower than the 9000 MT/s kit, but it doubles your total capacity. That’s a big deal.

128 GB of system memory in a dual-DIMM setup opens the door to running 110B and 140B models (like Mixtral or Qwen) in a single-node, single-box config — no distributed setup needed.

Yes, the bandwidth is slightly lower at 128 GB/s, but you’re getting a lot more model in the box.

Where These Kits Might Fit in a Local LLM Inference Build

While these kits aren’t explicitly targeted at LLM workloads, enthusiasts building local inference systems may still find value here. In desktop PC setups that pair discrete GPUs with large pools of system RAM, high-capacity, high-speed DDR5 can play a role — particularly when offloading model layers to memory in order to accommodate 70B+ models. For users exploring non-traditional memory hierarchies to push beyond VRAM limits, this offers another potential path worth watching.

Final Thoughts

We’ll have to wait for real-world benchmarks and availability — G.Skill hasn’t confirmed pricing or ship dates yet (expected May 2025), and kits like this will not be cheap. But for LLM enthusiasts chasing the dream of running model on a single workstation, these are the kits to watch.

These releases mark an important step in expanding memory options for local LLM inference. For builders looking to push beyond VRAM limits without moving to server-grade hardware, high-speed, high-capacity DDR5 opens up new possibilities. Ultimately, the real value will depend on price-per-GB and how these kits perform under memory-bound inference workloads in real-world testing.