How Fast Can You Run DeepSeek V3 LLM Model with Dual EPYC Processors and 768GB DDR5 at 24 Channels?

Local LLM inference is advancing rapidly, and for enthusiasts willing to push the limits, AMD’s EPYC platform is proving to be a compelling option. A recent test of DeepSeek V3 (671B parameters, 37B active MoE) on a dual-EPYC setup with 768GB DDR5-5600MHz memory reveals interesting performance insights. We’ll break down the results, compare them to alternatives, and analyze whether this setup is worth the investment.

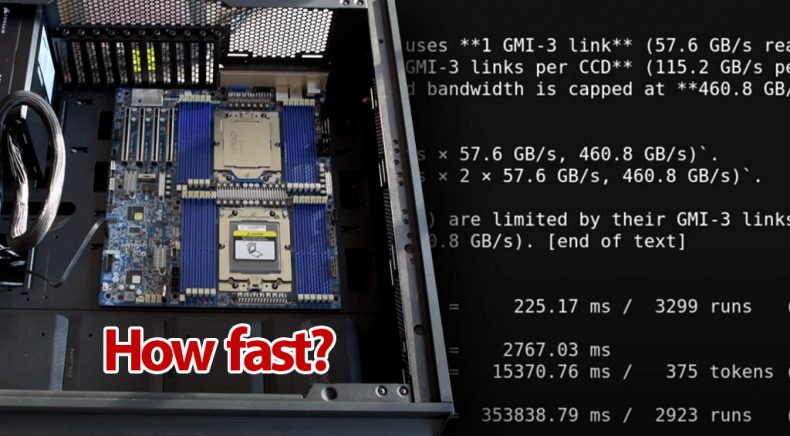

The Test Bench: Hardware Overview

- Motherboard: Gigabyte MZ73-LM0 or MZ73-LM1 (dual SP5 sockets, 24 DDR5 channels)

- CPUs: 2x AMD EPYC 9004/9005 series

- Memory: 24x 32GB DDR5-5600 RDIMMs (768GB total)

- Cooling: Arctic Freezer 4U-SP5

- Power Supply: Corsair HX1000i

- Storage: 1TB NVMe SSD

This setup has a cost between $6,000 – $14,000, depending on sourcing and component availability.

Performance Benchmarks: DeepSeek V3 Inference

DeepSeek V3 is a MoE (Mixture of Experts) model, meaning that only 37 billion parameters are active at any time, allowing for significantly faster performance than a dense 671B model.

For Q8 quantization, which maintains full precision, model loading takes approximately four minutes. In short-context scenarios, generation speed reaches 6-7 tokens per second, while prompt processing achieves 24 tokens per second.

The Q4 quantization significantly reduces model loading time to around two minutes. With a 1500-token context, generation runs at 8-9 tokens per second, and prompt processing reaches 40 tokens per second. At a 2000-token context, generation slows slightly to 7-8 tokens per second, while prompt processing is 35 tokens per second. This means that 2000 tokens prompt takes more then a minute to even process.

Memory Bandwidth Considerations

With 24 memory channels and DDR5-5600, total bandwidth reaches around 660 GB/s, ensuring rapid data movement and reducing latency bottlenecks. However, at 20K+ token contexts, inference starts slowing down dramatically, making this setup suboptimal for extended coding sessions but well-suited for short conversations and general LLM interaction.

Comparison: Dual EPYC vs. Mac Studio M2 Ultra (512GB Unified Memory)

How does this setup compare to Apple’s Mac Studio M3 Ultra inference speed, which has a 512GB Unified Memory architecture? Let’s break it down:

| Context Size | Prompt Processing Speed | Generation Speed |

|---|---|---|

| 69 tokens | 58.08 tokens/sec (1.19s) | 21.05 tokens/sec |

| 1145 tokens | 82.48 tokens/sec (13.89s) | 17.81 tokens/sec |

| 15777 tokens | 69.45 tokens/sec (227s) | 5.79 tokens/sec |

Compared to the Dual EPYC + 768GB DDR5 setup, the Mac Ultra has better speed.

Cost-Performance Analysis: Is It Worth It?

The biggest factor here is cost. If sourced carefully this Dual EPYC build can be an alternative to M3 Ultra for running huge MoE LLMs. However, users must balance the trade-offs:

Pros:

- Massive memory capacity and bandwidth (24-channel DDR5)

- Upgradability

- Lower cost vs. equivalent GPU and Apple M3 Ultra alternatives

- Power consumption (~400W)

Cons:

- Prompt processing slows down above 2K tokens (not ideal for extended context applications like programming)

- Initial setup complexity (server hardware)

Final Verdict: Who Should Build This?

If your workload is local LLM inference with short to moderate context lengths (2K-16K tokens), the Dual EPYC 768GB build is a powerful option. However, for extreme long-context applications (20K+ tokens), further RAM expansion or alternative architectures may be required.

For those prioritizing raw performance-per-dollar and DIY scalability, this setup is an exciting alternative to high-end consumer or workstation GPUs. Just be prepared for some hardware tinkering along the way!