Pre-built desktop workstation PCs for LLMs: Model Suggestions

As the field of Large Language Models (LLMs) continues to expand, hardware demands are increasing, especially for users wanting to run models in a more secure and private environment. The choice between building a custom PC and opting for a pre-built workstation plays a significant role in optimizing performance and efficiency in LLM workflows. This analysis focuses on pre-built desktop and workstation PCs tailored for LLMs, highlighting key performance factors such as GPU memory, model size, and quantization, along with suggestions based on model size and task complexity.

Build vs. Pre-built

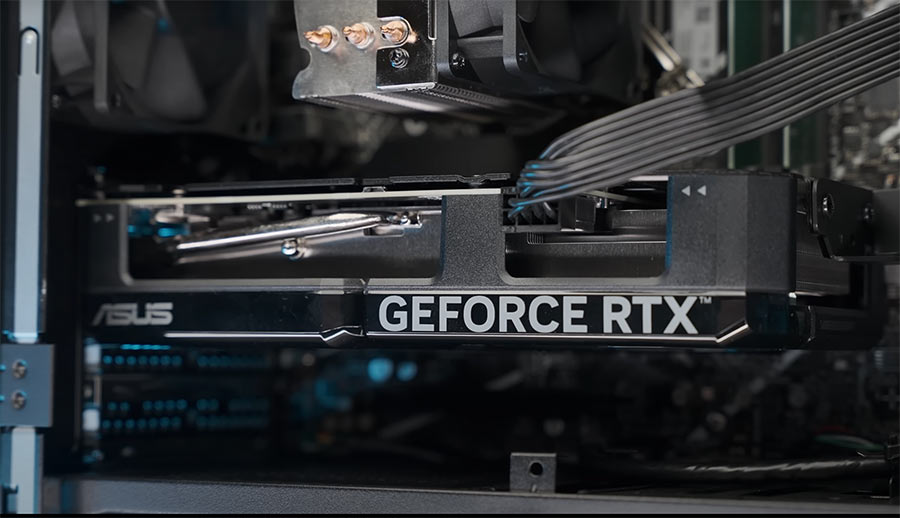

When selecting a machine for LLM tasks, the choice between building your own system or choosing a pre-built one often boils down to customization versus convenience. Building your own system offers the flexibility to tailor each component—such as GPUs, storage, and cooling—to the specific needs of LLM workloads. Enthusiasts comfortable with assembling hardware can leverage this approach to fine-tune their systems, often achieving superior performance-per-dollar ratios.

However, for those prioritizing convenience, pre-built workstations offer several advantages. They provide out-of-the-box reliability and come pre-configured for high-performance tasks, whether from OEMs like Dell or HP or from system integrators (SIs) that use standard, non-proprietary components. While OEM systems may include proprietary parts that limit upgradability, SIs generally allow more flexibility for future upgrades, which can be vital as LLM workloads evolve.

Model Size, VRAM, and Quantization

The size of an LLM, measured in billions of parameters, is a key factor in determining the required computational power. As model size increases, from smaller 8-billion-parameter models to massive 400B+ models, the hardware requirements, particularly GPU memory (VRAM), scale dramatically. For instance, small models (8B–14B) can run efficiently on consumer-grade GPUs, whereas models with 70 billion parameters or more require multi-GPU setups or professional-grade cards.

This is where quantization techniques, especially 4-bit quantization, come into play. Quantization reduces the numerical precision of the model weights, which significantly lowers the memory footprint while keeping output quality reasonably close to the original. Because a 4-bit weight takes a quarter of the space of a 16-bit (FP16) weight, 4-bit quantization lets much larger models run on GPUs with less memory.
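As a back-of-the-envelope check, the memory taken by the weights alone is roughly the parameter count (in billions) times the bits per weight, divided by eight, which gives gigabytes. The minimal Python sketch below applies that rule of thumb; it is an approximation that ignores the context (KV) cache, activations, and runtime overhead, which is why real-world VRAM figures come out somewhat higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes.

    params (billions) * bits per weight / 8 bits per byte = GB, because the
    factor of 1e9 in "billions" and in "gigabytes" cancels out.
    Ignores KV cache, activations, and framework overhead.
    """
    return params_billions * bits_per_weight / 8


for params in (8, 14, 34, 70):
    fp16 = weight_memory_gb(params, 16)  # unquantized half precision
    q4 = weight_memory_gb(params, 4)     # idealized 4-bit, no per-block scale overhead
    print(f"{params}B: FP16 ~{fp16:.0f} GB, 4-bit ~{q4:.1f} GB")
```

For a 70B model this works out to about 140 GB at FP16 versus about 35 GB at 4-bit, which is why quantization is what brings such models within reach of desktop hardware.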

| Model name | Parameter Count | Model Quantization | VRAM required (GB) |
|---|---|---|---|
| 7B_q4_0 | 7B | 4-bit | 5.5 |
| 13B_q4_0 | 13B | 4-bit | 7.8 |
| 34B_q4_0 | 34B | 4-bit | 19.1 |
| 8x7B_q5_0 | 8x7B (46B) | 5-bit | 32.2 |
| 65B_q4_0 | 65B | 4-bit | 36.8 |
| 70B_q4_0 | 70B | 4-bit | 38.9 |
| 120B_Q4 | 120B | 4-bit | 66 |
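The table's figures line up with how llama.cpp's q4_0 and q5_0 formats store weights. As we understand the GGML block layout, every block of 32 weights carries a 16-bit scale: q4_0 uses 18 bytes per block (4.5 bits per weight) and q5_0 uses 22 bytes (5.5 bits per weight). The sketch below treats those byte counts as assumptions and estimates the weight size for a few of the table rows.

```python
# Assumed GGML block layouts for q4_0 and q5_0 (not taken from the article).
WEIGHTS_PER_BLOCK = 32
Q4_0_BLOCK_BYTES = 2 + 16      # fp16 scale + 32 four-bit values (16 bytes)
Q5_0_BLOCK_BYTES = 2 + 4 + 16  # fp16 scale + 32 fifth bits (4 bytes) + 32 four-bit values


def bits_per_weight(block_bytes: int) -> float:
    """Effective storage cost per weight, including the per-block scale."""
    return block_bytes * 8 / WEIGHTS_PER_BLOCK


def quantized_weights_gb(params_billions: float, block_bytes: int) -> float:
    """Weight size in decimal GB; excludes KV cache and runtime buffers."""
    return params_billions * bits_per_weight(block_bytes) / 8


rows = [
    ("7B_q4_0", 7, Q4_0_BLOCK_BYTES),
    ("34B_q4_0", 34, Q4_0_BLOCK_BYTES),
    ("8x7B_q5_0", 46, Q5_0_BLOCK_BYTES),
    ("70B_q4_0", 70, Q4_0_BLOCK_BYTES),
]
for name, params, block in rows:
    print(f"{name}: ~{quantized_weights_gb(params, block):.1f} GB of weights")
```

This reproduces the 34B row (19.1 GB) and lands within about 0.6 GB of the 46B and 70B rows. The smallest row shows a bigger gap (about 3.9 GB computed versus 5.5 GB listed for 7B) because fixed costs such as the context cache and compute buffers make up a larger share of the total.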

Pre-built Computer for Small LLMs (8B to 14B)

For users working with smaller LLMs such as LLaMA 3.1 8B, Phi 3 14B, DeepSeek Coder v2 Lite, or Qwen2.5 14B, the memory requirements after 4-bit quantization range from 5.5 GB to 10.2 GB of VRAM. Given these specifications, mid-range GPUs like the NVIDIA RTX 4060 Ti 16GB or the RTX 4070 12GB offer sufficient headroom for most tasks, balancing affordability and performance.

For these workloads, pre-built systems configured with these GPUs provide enough power to handle models up to 14 billion parameters effectively, especially when combined with adequate CPU and storage.
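A quick way to apply these numbers is to compare a model's VRAM estimate, plus some headroom for the context cache and runtime buffers, against a card's capacity. The sketch below does that for the 5.5 to 10.2 GB range quoted above; the 1 GB default headroom is an assumption rather than a measured figure, and longer contexts need more.

```python
def fits_on_gpu(model_gb: float, gpu_vram_gb: float, headroom_gb: float = 1.0) -> bool:
    """True if the model estimate plus a safety margin fits on a single GPU.

    headroom_gb is an assumed allowance for context cache and runtime buffers.
    """
    return model_gb + headroom_gb <= gpu_vram_gb


# Lower and upper ends of the 4-bit range for 8B-14B models quoted above.
small_model_range_gb = (5.5, 10.2)

for vram_gb in (12, 16):
    ok = all(fits_on_gpu(m, vram_gb) for m in small_model_range_gb)
    print(f"{vram_gb} GB card covers the whole small-model range: {ok}")
```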

ROG Strix G13CHR G13CHR-71470F001XS

The Asus G13CHR represents a notable improvement over previous OEM pre-built gaming systems, addressing some common shortcomings in this market. It features better ventilation, with a functional airflow design on both the front and top panels, and ships with dual-channel RAM by default, which boosts performance. Inside, it’s powered by an Intel i7-14700F CPU paired with an RTX 4060 GPU, a decent combination for most modern games. However, the i7’s reputation for power draw spikes and potential stability issues may raise concerns, though Asus does offer a firmware update to mitigate these risks. The system handles LLMs like Llama 3.1 8B and Qwen 2.5 14B efficiently while staying cool and relatively quiet.

The G13CHR offers solid LLM and gaming performance with improved thermals and quieter operation, making it a strong contender in the mainstream pre-built market.

Pre-built Computer for Medium LLMs (16B to 34B)

For medium-sized models like LLaMA 30B, Qwen2.5 32B Instruct, Mistral Small 22B, and DeepSeek Coder 33B, the memory demand after quantization increases substantially, with VRAM requirements in the 19 to 22 GB range. To accommodate these models, users need to look toward more powerful GPUs like the RTX 4090, RTX 3090, or workstation-class cards such as the NVIDIA RTX A5000 or RTX 5000 Ada.

In this range, pre-built systems configured with any of these GPUs provide a good balance of VRAM and processing power, allowing for real-time inference and lighter training or fine-tuning workloads that scale with the model’s complexity.

Main Gear MG-1

The Main Gear MG-1 Desktop PC is built for running LLMs such as DeepSeek Coder 33B. It features an Intel Core i5-14400F processor, providing solid multi-threaded performance for model inference tasks.

The system includes an MSI Pro B760-VC WiFi HS motherboard paired with 32GB of T-Force Delta RGB DDR4 RAM, essential for managing LLM workloads. For graphics, it’s powered by an NVIDIA GeForce RTX 4090 with 24GB of VRAM, ensuring efficient handling of large models.

The 1TB T-Force A440 NVMe SSD provides high-speed data access, while the 850W FSP Hydro G Pro power supply delivers stable power to all components. Overall, the system is designed to run large LLMs efficiently.

Pre-built Computer for Large LLMs (70B to 72B)

At the upper end of the LLM spectrum, open-weight models such as LLaMA 3.1 70B, Qwen2.5 72B, and Mixtral 8x7B begin to approach the quality of their proprietary counterparts in terms of answer accuracy. These models require 40 to 46 GB of VRAM even with 4-bit quantization, making them highly demanding in terms of hardware resources.

These models demand high-performance multi-GPU systems, such as two RTX 3090 or RTX 4090 cards (24 GB each, 48 GB combined), or professional-grade cards like the NVIDIA RTX 6000 Ada or RTX A6000, which offer 48 GB of VRAM each.
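When a model is split across several identical cards, a rough GPU count comes from dividing the model's VRAM need, plus headroom, by the memory per card and rounding up. The sketch below assumes the inference runtime can spread layers across GPUs (llama.cpp and several other runtimes can) and treats pooled VRAM as simply additive, which slightly flatters real multi-GPU setups; the 2 GB headroom is an assumption.

```python
import math


def gpus_needed(model_gb: float, vram_per_gpu_gb: float, headroom_gb: float = 2.0) -> int:
    """Minimum number of identical GPUs whose pooled VRAM covers model + headroom.

    Simplification: assumes VRAM adds up across cards with no per-GPU waste.
    """
    return math.ceil((model_gb + headroom_gb) / vram_per_gpu_gb)


# The 40-46 GB range quoted for 70B-72B models at 4-bit.
for model_gb in (40, 46):
    print(f"{model_gb} GB -> {gpus_needed(model_gb, 24)} x 24 GB "
          f"or {gpus_needed(model_gb, 48)} x 48 GB")
```

Both ends of the range come out at two 24 GB cards or a single 48 GB card, matching the configurations above.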

Dual GPU Pro Workstation – Focus G

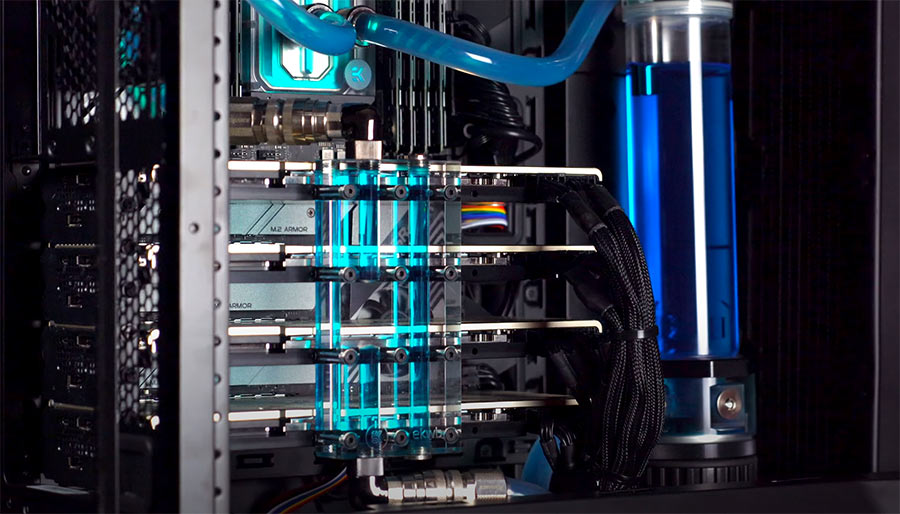

Designed to meet the high demands of professionals in fields such as 3D rendering, AI deep learning, and data science, the Focus G dual-GPU workstation offers the advantages of parallel processing and large pooled VRAM, making it suitable for large language models and other computationally intensive tasks.

The workstation is fully upgradeable to high-end components, including an Intel Core i9 13900K, up to 128GB of DDR5 RAM, and dual RTX 4090 GPUs with a combined 48GB of VRAM (or dual NVIDIA RTX 6000 Ada cards with a combined 96GB of VRAM for professional-grade tasks). The system is equipped with 240mm water cooling to keep temperatures under control during intensive workloads.

The base configuration includes an MSI MEG Z690 Unify motherboard and a 13th Gen Intel Core i7 13700K processor with 16 cores for strong multi-threaded performance. It comes with 64GB of DDR5 5600MT/s RAM (2x32GB), which is upgradeable, and a 1TB NVMe SSD for high-speed storage (read/write speeds of 1000MB/s or faster).

For power, the system relies on a 1200W 80+ Gold-rated PSU (such as the Enermax D.F. 2) to support the dual RTX 3090 GPUs with 24GB of VRAM each, delivering excellent performance for graphics-intensive applications and AI workloads.

The Digital Storm Velox PRO Desktop PC

https://www.digitalstorm.com/configurator.asp?id=4994521

The Digital Storm Velox PRO Desktop PC is configured for running 70B and 72B parameter large language models (LLMs). It features an AMD Ryzen 5 7600X processor with 6 cores and a 5.3 GHz boost clock, offering efficient multi-threading.

It uses an ASUS Prime B650M-A AX motherboard with 64GB of DDR5 5200MHz RAM. Graphics processing is handled by dual NVIDIA GeForce RTX 4090 GPUs, each with 24GB of VRAM (48GB combined), ensuring smooth performance for LLM inference.

Storage includes a 2TB Digital Storm M.2 NVMe SSD for fast data access, and an 850W 80+ Gold-rated power supply powers these high-performance components. Overall, the system is well suited to running large-scale LLMs efficiently.
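Dual high-end GPUs make power supply sizing worth a quick sanity check. The sketch below adds up nominal component power; all wattages are assumptions (450 W is NVIDIA's stock board power for the RTX 4090, 142 W is the package power limit commonly cited for 105 W TDP Ryzen 7000 CPUs, and 75 W for the rest of the system is a rough guess). Real draw during LLM inference is often below these peaks, especially when GPU power limits are lowered.

```python
# All wattages are assumed nominal figures; adjust them to the actual parts.
stock_w = {
    "RTX 4090 #1": 450,
    "RTX 4090 #2": 450,
    "Ryzen 5 7600X": 142,
    "board, RAM, SSD, fans": 75,
}


def psu_load_fraction(components_w: dict[str, int], psu_w: int) -> float:
    """Total nominal component power as a fraction of the PSU rating."""
    return sum(components_w.values()) / psu_w


# Same system with each GPU capped at a hypothetical 300 W power limit.
limited_w = {**stock_w, "RTX 4090 #1": 300, "RTX 4090 #2": 300}

for label, parts in (("stock", stock_w), ("300 W GPU limit", limited_w)):
    total = sum(parts.values())
    print(f"{label}: {total} W = {psu_load_fraction(parts, 850):.0%} of 850 W")
```

At stock settings the nominal total exceeds an 850 W rating, while capping each GPU at 300 W brings it under; how close a given build runs to its limit depends on the power limits it ships with and the workload.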

Pre-built Computer for Very Large LLMs (100B+) and 72B Models with 16K+ Context

At the top end of open-weight LLMs, models with 100B+ parameters – such as Llama 3.1 405B and DeepSeek v2.5 – demand significant computational resources, even with 4-bit quantization. These models require up to 236 GB of VRAM, making them nearly impossible to run on standard desktop workstations. While they approach the quality of proprietary systems like GPT-4, their hardware demands place them firmly in the realm of data centers.

For users aiming to run smaller models in this class, there are still options for high-performance desktop setups. Models like Mistral-Large-2407 (59.43 GB) and Command R+ 104B (59.43 GB) can be deployed using triple RTX 3090 or RTX 4090 GPUs, each with 24 GB of VRAM. Alternatively, professional-grade setups like dual NVIDIA RTX 6000 Ada or A6000 GPUs, offering 48 GB each, provide additional capacity for larger models.
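The heading also groups 72B models at 16K+ context with this class because the context itself consumes VRAM: the KV cache stores a key and a value vector per layer for every token. The sketch below estimates its size with an FP16 cache; the configuration (80 layers, 8 key/value heads via grouped-query attention, head dimension 128) is our assumption of a Qwen2.5 72B or Llama 3.1 70B-style model, and runtimes that quantize the cache will need less.

```python
def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                context_tokens: int, bytes_per_value: int = 2) -> float:
    """KV cache size in decimal GB for a single sequence.

    Factor 2 = one key and one value tensor per layer; bytes_per_value=2 is FP16.
    """
    per_token_bytes = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens / 1e9


# Assumed 72B-class config: 80 layers, 8 KV heads (GQA), head_dim 128.
for ctx in (4096, 16384, 32768):
    print(f"{ctx:>6} tokens: ~{kv_cache_gb(80, 8, 128, ctx):.1f} GB KV cache")
```

Under these assumptions a 16K context adds roughly 5.4 GB on top of 40 to 46 GB of 4-bit weights, which can push the total past the 48 GB of two 24 GB cards and makes a third GPU attractive.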

For the more demanding 100B–140B models such as Mixtral 8x22B, running with 4-bit quantization on a desktop would require four 24 GB GPUs, which pushes the limits of most desktop configurations. As an alternative, users can opt for 3-bit quantization (IQ3_M), which reduces the model size further and allows these large models to run on a triple-GPU setup. However, this approach comes with trade-offs in performance and accuracy, making it a compromise best suited to those with more constrained hardware resources.
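The four-versus-three GPU arithmetic can be checked with the same rule of thumb used for weight size. The sketch below assumes Mixtral 8x22B's commonly cited total of about 141B parameters, 4.5 bits per weight for 4-bit q4_0, and roughly 3.66 bits per weight for IQ3_M (the average llama.cpp's quantize tool lists for that mix); the 2 GB headroom and additive VRAM pooling are also simplifying assumptions.

```python
import math

MIXTRAL_8X22B_PARAMS_B = 141  # assumed total parameter count, in billions

BITS_PER_WEIGHT = {
    "4-bit (q4_0)": 4.5,    # 18-byte blocks of 32 weights
    "3-bit (IQ3_M)": 3.66,  # approximate average for this quantization mix
}


def gpus_needed(model_gb: float, vram_per_gpu_gb: float = 24, headroom_gb: float = 2.0) -> int:
    """Identical GPUs needed if VRAM pools additively across cards (a simplification)."""
    return math.ceil((model_gb + headroom_gb) / vram_per_gpu_gb)


for label, bpw in BITS_PER_WEIGHT.items():
    weights_gb = MIXTRAL_8X22B_PARAMS_B * bpw / 8
    print(f"{label}: ~{weights_gb:.1f} GB -> {gpus_needed(weights_gb)} x 24 GB GPUs")
```

Under these assumptions the 4-bit weights come to about 79 GB and need four 24 GB cards, while IQ3_M brings them to about 65 GB, which fits on three.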

Bizon ZX6000 G2

https://bizon-tech.com/bizon-zx6000.html#2345:20147;2346:20411;2347:20175;2349:20221;2350:20429;2362:54195

Conclusion

Selecting the right pre-built workstation for LLM tasks requires careful consideration of model size, VRAM requirements, and the type of tasks—whether inference, fine-tuning, or training. With the advent of 4-bit quantization, even consumer-grade GPUs can handle models that would otherwise require professional-grade hardware. For smaller LLMs, mid-tier GPUs like the RTX 4060 Ti or 4070 are sufficient, while medium and large models necessitate high-end solutions like the RTX 4090, RTX 3090, or even dual-GPU setups.

Pre-built workstations provide a convenient entry point for users who want to focus on software without the complexity of building custom systems, making them a viable option for both developers and researchers working with Large Language Models at various scales.

Allan Witt

Allan Witt is Co-founder and editor in chief of Hardware-corner.net. Computers and the web have fascinated me since I was a child. In 2011, I started training as an IT specialist at a medium-sized company and started a blog at the same time. I really enjoy blogging about tech. After successfully completing my training, I worked as a system administrator at the same company for two years. As a part-time job, I started tinkering with pre-built PCs and building custom gaming rigs at a local hardware shop. The desire to build PCs full-time grew stronger, and now this is my full-time job.


Interesting article, but what about budget-conscious users? Are there any pre-built options under $2,000 that can handle 34B models?

You’re right, finding capable systems within a budget is important. While most pre-builts equipped for 34B models cost more than $2,000, you might find good deals on refurbished or secondhand systems, or you could build your own PC to have more control over costs. Currently, the best option is a secondhand RTX 3090 build. The RTX 3090 is a beast, and it is only slightly slower than the RTX 4090 in inference speed. There are many similar offers on the market for around $1,500. Another, even cheaper option is to purchase a refurbished OEM workstation with a good power supply (750W+), such as a Lenovo ThinkStation, Dell Precision, or HP Z1/Z4, and install a secondhand RTX 3090. With this approach, the cost can be as low as $1,000.