What Laptop to Choose for PyTorch Projects?

In the realm of deep learning, hardware choices play a pivotal role in determining the efficiency of your workflows. When it comes to PyTorch-based projects, selecting the right laptop can significantly accelerate performance, with potential gains of up to 10x. For researchers and developers for whom milliseconds matter, optimizing your setup can be the key to unlocking faster, more efficient model training and avoiding the slowdowns that hinder progress.

PyTorch, being a leading deep learning framework, demands a significant amount of computing power, and selecting the optimal laptop can be a daunting task. This guide aims to demystify the process, arming you with the knowledge to choose a machine that fuels, rather than hinders, your AI ambitions.

While the promise of on-the-go deep learning is alluring, running PyTorch on a laptop presents unique challenges. Balancing portability with performance, grappling with thermal throttling, and navigating the ever-evolving landscape of hardware options can feel overwhelming.

Fear not: this comprehensive guide is your roadmap to navigating these complexities and finding the perfect laptop to supercharge your PyTorch projects in 2024.

Understanding PyTorch and its Requirements

PyTorch, renowned for its dynamic computation graphs and Pythonic ease of use, has become a go-to framework for researchers and developers alike. From natural language processing to computer vision, its applications are vast and demanding.

However, this flexibility and power come at a cost. PyTorch, especially for tasks involving large datasets and complex models, is a resource-intensive beast. To tame this beast and unlock its full potential, you need a laptop that meets its specific hardware requirements.

The Hardware Trinity: GPU, CPU, and RAM

Three primary components form the holy trinity of deep learning hardware: the GPU, the CPU, and RAM.

The beating heart of deep learning, the GPU, excels at parallel processing, making it ideal for handling the massive matrix multiplications inherent in neural networks. A powerful dedicated GPU is non-negotiable for serious PyTorch work.

While the GPU handles the heavy lifting, the CPU acts as the orchestrator, managing data flow and executing tasks that are less computationally intensive. A multi-core CPU with a high clock speed ensures your system runs smoothly even under heavy workloads.

Think of RAM as your laptop’s short-term memory. It stores the data your PyTorch model is actively using. Larger RAM capacity allows you to work with larger datasets and more complex models without encountering performance hiccups. When selecting the amount of system RAM for a PyTorch laptop workstation, a common rule of thumb is to aim for system memory that is at least double the VRAM. For instance, if you’re configuring a laptop with a 24GB GPU, double the VRAM is 48GB, so it’s advisable to opt for a model with 64GB of system RAM, the next common configuration above that.
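The rule of thumb above can be written as a small calculation. This is a minimal sketch: the function name `recommended_ram_gb` and the list of common memory configurations are assumptions made for illustration, not vendor data.

```python
# Common laptop memory configurations in GB (an illustrative assumption).
COMMON_RAM_SIZES_GB = [8, 16, 32, 64, 96, 128]


def recommended_ram_gb(vram_gb, factor=2):
    """Return the smallest common RAM size that is at least factor x VRAM."""
    target = vram_gb * factor
    for size in COMMON_RAM_SIZES_GB:
        if size >= target:
            return size
    # Nothing in the list is large enough, so fall back to the raw target.
    return target


print(recommended_ram_gb(24))  # 2 x 24 = 48, next common size is 64
print(recommended_ram_gb(16))  # 2 x 16 = 32, which is itself a common size
```

Treat the result as a starting point rather than a hard requirement; datasets that are streamed from disk may need less, while heavy preprocessing may need more.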

Top Laptops for PyTorch in 2024

Choosing the “best” is subjective and depends heavily on your individual needs and budget. However, certain laptops consistently stand out in the demanding field of deep learning. This section provides a curated list of top contenders, complete with detailed specifications, pros and cons, and insights drawn from hands-on experience.

Balancing Budget and Performance

Deep learning hardware doesn’t come cheap, but achieving a good balance between cost and performance is entirely possible.

- As the cornerstone of deep learning performance, investing in a capable GPU should be your priority. Consider the trade-off between the latest and greatest model and a previous-generation card that offers excellent value for money.

- Adequate RAM is crucial for smooth operation. 16GB is a reasonable minimum, while 32GB offers headroom for larger projects and future-proofs your investment.

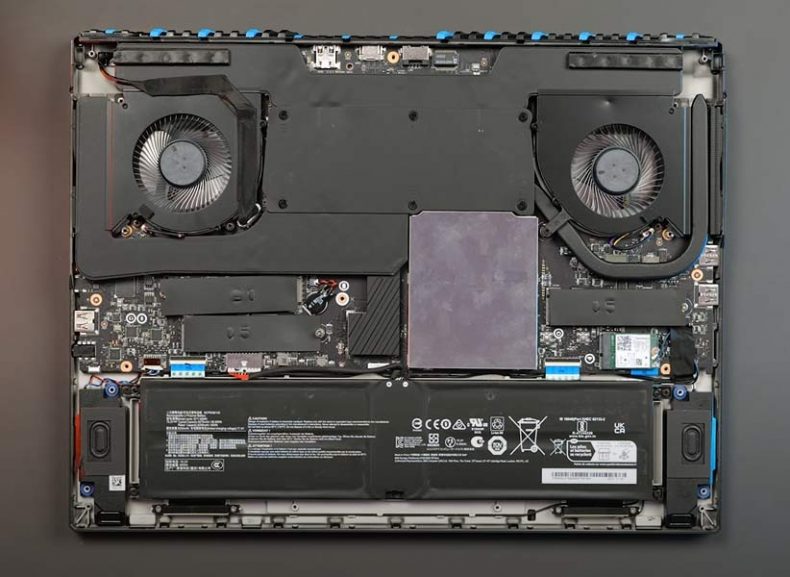

The MSI Titan 18 HX

The MSI Titan 18 HX is an extremely powerful, high-end laptop designed for users needing maximum performance, making it an excellent choice for running PyTorch or other demanding machine learning workloads. In our tests, the MSI Titan 18 HX, equipped with the RTX 4090, slashed training time on a ResNet-50 image classification task by 47% compared to its predecessor.

It features a 14th Gen Intel Core i9 HX processor, 128GB of memory, an RTX 4090 GPU (16GB VRAM), and a 4K 120Hz mini LED display, providing desktop-level performance in a laptop form factor.

Its large memory and storage capacity, coupled with robust cooling and multiple connectivity options, make it ideal for heavy data processing and deep learning tasks. However, its hefty price ($5,399) and bulky design make it suitable only for professionals who require this extreme level of power.

While alternatives like the Alienware m18 and Lenovo Legion Pro 7i Gen 8 offer more value for general high-end usage, the Titan 18 HX is unmatched in raw performance for specialized tasks.

The MSI CreatorPro 16 AI Studio

The MSI CreatorPro 16 AI Studio, powered by the Intel Core Ultra 9 185H and the NVIDIA RTX 5000 Ada Generation GPU (16GB VRAM), is a top-tier laptop designed for professionals in AI development, machine learning, and data science. With 64GB of memory and a 2TB NVMe SSD, it provides ample power and storage for large datasets and complex tasks like PyTorch model training, inference, and deep learning workflows.

The 16-inch display delivers sharp visuals, crucial for professionals in AI research, 3D rendering, and content creation. Its professional-grade GPU ensures high-performance computing, optimized for AI-driven applications, while running on Windows 11 Pro enhances productivity and security.

“As a PhD student working on medical image analysis, the MSI CreatorPro 16 AI Studio has been a game-changer. The RTX 5000 Ada handles my massive datasets without breaking a sweat.”

Data science researcher

With a range of connectivity options and robust build quality, this laptop is a powerful, portable solution for those needing exceptional performance in demanding AI and machine learning tasks.

PyTorch: Straddling the CPU-GPU Divide

While PyTorch, at its core, remains hardware-agnostic, there’s no denying its penchant for harnessing the raw computational muscle of GPUs. This predilection stems from the very nature of deep learning workloads, which often involve churning through massive datasets and performing billions, if not trillions, of matrix operations. GPUs, with their massively parallel architecture, are purpose-built for such tasks, offering significant performance gains over traditional CPUs.

However, this doesn’t relegate CPUs to the sidelines entirely. For smaller projects, prototyping, or scenarios where data transfer overhead outweighs the benefits of GPU acceleration, running PyTorch on a CPU remains a perfectly viable option.

Think of it this way: CPUs are like nimble Swiss Army knives, capable of handling a variety of general-purpose computing tasks with reasonable efficiency. GPUs, on the other hand, are specialized power tools, excelling at specific tasks like matrix multiplication – the bedrock of deep learning.

Therefore, the decision to leverage CPU or GPU acceleration boils down to a delicate balance of factors:

- Dataset Size: For smaller datasets, the performance difference might be negligible, making CPU a suitable choice. However, as your data balloons, the GPU’s parallel processing prowess becomes indispensable.

- Model Complexity: Simple models with fewer parameters might not saturate the CPU’s capabilities, while deeper, more intricate architectures crave the GPU’s computational throughput.

- Hardware Availability: Access to a dedicated GPU, especially a high-end one, can be a deciding factor. If your budget or infrastructure limits you to a CPU, PyTorch can still be utilized effectively, albeit potentially with longer training times.

Ultimately, PyTorch offers the flexibility to switch between CPU and GPU execution seamlessly. This allows users to experiment and profile their code to determine the optimal configuration for their specific needs. While PyTorch might have a soft spot for GPUs, it doesn’t shy away from leveraging the CPU’s strengths when the situation demands it.
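Switching between CPU and GPU execution usually comes down to choosing a device once and placing the model and data on it. The following is a minimal sketch; the tiny linear model and random batch are placeholders invented for illustration, not part of any real project.

```python
import torch

# Pick the GPU when CUDA is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A tiny placeholder model and batch, just to show the device handling.
model = torch.nn.Linear(128, 10).to(device)
batch = torch.randn(32, 128, device=device)

# Inference only, so gradient tracking is switched off.
with torch.no_grad():
    output = model(batch)

print(f"Ran on {output.device}, output shape {tuple(output.shape)}")
```

Because the rest of the code only refers to `device`, the same script runs unchanged on a CPU-only laptop and on one with a dedicated GPU, which makes it easy to profile both configurations.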

The Role of the GPU in PyTorch

If the CPU is the meticulous conductor, the GPU is the thundering orchestra of deep learning performance. It’s the raw horsepower behind accelerating the training and inference of your models, crunching through massive matrix multiplications with unparalleled efficiency. While the CPU diligently handles orchestration and housekeeping tasks, the GPU is where the magic of parallel processing truly shines.

And in the realm of deep learning GPUs, one name reigns supreme: Nvidia. Their CUDA platform has become synonymous with AI acceleration, offering a level of hardware and software synergy unmatched by competitors.

But even within the green team’s expansive portfolio, performance can vary wildly. To illustrate these differences and help you navigate the GPU landscape, we’ve put together a benchmark suite showcasing the relative muscle of Nvidia’s top contenders across various PyTorch workloads.

| GPU Model | ResNet50 Throughput | Transformer-XL Base Throughput | Tacotron2 Throughput | BERT Base SQuAD Throughput |
|---|---|---|---|---|
| RTX 4090 | 721 | 22,750 | 32,910 | 161,590 |
| RTX 3090 | 513 | 12,101 | 25,350 | 97,404 |
| RTX 6000 Ada | 647 | 20,477 | 31,837 | 157,877 |
| RTX A6000 | 514 | 14,495 | 25,135 | 117,073 |
| RTX A4000 | 255 | 6,253 | 11,029 | 24,262 |
| RTX A4500 | 342 | 8,679 | 13,713 | 59,005 |
| Quadro RTX 8000 | 274 | 6,623 | 17,717 | 44,068 |
| RTX 3080 | 384 | 5,007 | 7,251 | 29,891 |
| RTX 3070 | 246 | 2,147 | 3,789 | N/A |
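Raw throughput numbers are easier to compare as ratios against a single baseline. The sketch below does this for the ResNet50 column of the table above; the choice of the RTX 3090 as baseline and the helper name `relative_to` are assumptions for illustration.

```python
# ResNet50 throughput copied from the table above (units as reported there).
resnet50 = {
    "RTX 4090": 721,
    "RTX 6000 Ada": 647,
    "RTX A6000": 514,
    "RTX 3090": 513,
    "RTX 3080": 384,
    "RTX A4500": 342,
    "Quadro RTX 8000": 274,
    "RTX A4000": 255,
    "RTX 3070": 246,
}


def relative_to(baseline, table):
    """Express every entry as a multiple of the baseline GPU's throughput."""
    base = table[baseline]
    return {gpu: round(value / base, 2) for gpu, value in table.items()}


ratios = relative_to("RTX 3090", resnet50)

# Print the GPUs from fastest to slowest relative to the baseline.
for gpu, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    print(f"{gpu:<16} {ratio:.2f}x")
```

On this column the RTX 4090 comes out at roughly 1.4 times the RTX 3090. Dividing such ratios by current street prices, which are not listed here, would give a rough price-to-performance figure.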

As evident from our testing, the raw throughput offered by each GPU can vary significantly, especially across different model architectures. While the RTX 4090 flexes its muscles as the undisputed performance champion, the price-to-performance sweet spot might lie elsewhere depending on your specific needs and budget.

Are more CPU cores better for PyTorch?

When considering PyTorch performance, especially with modern workloads, the difference between 6-core and 8-core processors can be significant, depending on how you’re utilizing your system.

If your setup heavily relies on a GPU for PyTorch tasks, the CPU’s role is still important but secondary. Here, an 8-core processor offers more headroom for parallel tasks, allowing for smoother multitasking and quicker data handling. While a 6-core CPU is certainly capable, the extra cores in an 8-core setup can reduce bottlenecks when managing large datasets or running multiple applications simultaneously, particularly during intensive deep learning workflows.

However, if you’re running PyTorch solely on a CPU, the core count becomes even more crucial. With CPU-only operations, PyTorch scales efficiently with more cores. An 8-core processor provides a noticeable boost in performance, handling larger models and datasets with greater ease. Training times will be shorter, and overall responsiveness will improve, making it a more suitable choice for demanding tasks. A 6-core processor, while competent, might start to feel the strain with more complex models, leading to longer processing times.

In essence, whether you’re leveraging a GPU or sticking to CPU-only operations, an 8-core processor will generally provide a more robust and future-proof solution for PyTorch workloads. It offers better multitasking and faster processing, allowing you to make the most of your deep learning projects without unnecessary slowdowns.
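One way to see how much extra cores help on your own machine is to time the same CPU workload with different thread counts. This is a rough sketch, not a rigorous benchmark: the matrix size and repeat count are arbitrary assumptions, and `os.cpu_count()` reports logical cores, which can be higher than the physical core count.

```python
import os
import time

import torch


def time_matmul(num_threads, size=512, repeats=5):
    """Time a square matrix multiplication on the CPU with a given thread count."""
    torch.set_num_threads(num_threads)
    a = torch.randn(size, size)
    b = torch.randn(size, size)
    a @ b  # warm-up so one-time setup cost is not measured
    start = time.perf_counter()
    for _ in range(repeats):
        a @ b
    return (time.perf_counter() - start) / repeats


available = os.cpu_count() or 1

# Compare a single thread against all logical cores the OS reports.
for threads in sorted({1, available}):
    elapsed_ms = time_matmul(threads) * 1000
    print(f"{threads} thread(s): {elapsed_ms:.2f} ms per matmul")
```

Results vary with thermals and background load, so run it a few times before drawing conclusions about a 6-core versus 8-core machine.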

Laptops with Advanced Cooling for PyTorch

While strategic tweaks and external cooling solutions can help tame the thermal beast, hitting the thermal limit wall is an unfortunate reality for many deep learning practitioners. This is where hardware selection becomes paramount. Opting for a laptop engineered from the ground up for sustained performance under heavy workloads can mean the difference between smooth training sessions and frustrating thermal throttling.

The surface of the laptop can become quite hot during PyTorch training.

Look for machines that boast advanced cooling solutions, often featuring larger heat pipes, vapor chambers, and strategically designed vents that maximize airflow. These features might add a slight premium to the price tag, but consider it an investment in consistent performance and hardware longevity. After all, a laptop that throttles constantly isn’t just an inconvenience; it’s a bottleneck to your AI ambitions.

Fine-Tuning Your PyTorch Laptop: Squeezing Out Every Last Drop of Performance

Selecting the right hardware is only half the battle when it comes to running PyTorch effectively. Even the most powerful components can be held back by a poorly optimized software environment. Think of it like tuning a high-performance engine: you’ve got the horsepower, now you need to make sure everything is running smoothly for peak efficiency.

Update hardware drivers

First and foremost, driver updates are non-negotiable. It’s a seemingly obvious step, yet it trips up even seasoned users. Outdated drivers can lead to performance bottlenecks and compatibility issues, leaving valuable GPU cycles untapped. Keeping them current is akin to ensuring your hardware is speaking the latest and greatest software language.

How to avoid CPU bottlenecks

To optimize PyTorch training and avoid CPU bottlenecks, focus on efficient data loading by using formats like HDF5 or LMDB, and preprocess your data to reduce on-the-fly processing. Adjust the num_workers and prefetch_factor settings in your dataloader to keep the pipeline saturated, and consider using GPU-based augmentations with libraries like Kornia or Nvidia DALI to offload work from the CPU. Monitor system performance with profiling tools to identify and resolve bottlenecks, ensure fast storage, and fine-tune your Distributed Data Parallel (DDP) setup for optimal resource utilization.
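The `num_workers` and `prefetch_factor` settings mentioned above are arguments of `torch.utils.data.DataLoader`. The following is a minimal sketch of how they fit together; the helper name `make_loader`, the default values, and the synthetic dataset are assumptions chosen for illustration, and good values depend on your CPU and storage.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(dataset, batch_size=64, num_workers=4, prefetch_factor=2):
    """Build a DataLoader with background workers and prefetching."""
    options = {
        "batch_size": batch_size,
        "shuffle": True,
        "num_workers": num_workers,
        # Pinned host memory speeds up host-to-GPU copies; only useful with CUDA.
        "pin_memory": torch.cuda.is_available(),
    }
    if num_workers > 0:
        # These two options are only valid when worker processes are used.
        options["prefetch_factor"] = prefetch_factor
        options["persistent_workers"] = True
    return DataLoader(dataset, **options)


if __name__ == "__main__":
    # Synthetic stand-in data: 1,024 fake 3x32x32 images with 10 classes.
    images = torch.randn(1024, 3, 32, 32)
    labels = torch.randint(0, 10, (1024,))
    loader = make_loader(TensorDataset(images, labels))
    for batch_images, batch_labels in loader:
        pass  # the training step would go here
    print(f"Iterated over {len(loader)} batches")
```

A reasonable way to tune this is to raise `num_workers` step by step while watching GPU utilization; once the GPU stays busy, extra workers mostly cost RAM.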

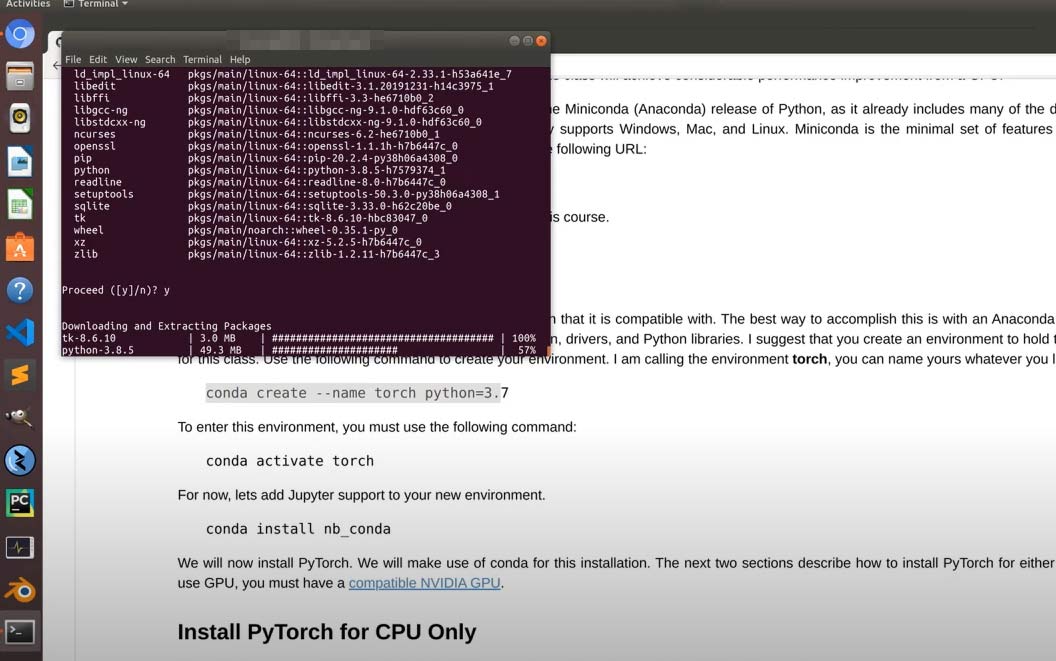

Use Linux

On the operating system front, lightweight Linux distributions can be a game-changer. Switching from a resource-intensive operating system to a leaner option like Ubuntu can free up valuable system resources, making them available for PyTorch instead of system overhead. The streamlined nature of Linux also fosters a more development-friendly environment, with a rich ecosystem of tools and libraries at your disposal.

Manage your OS resources

Resource management is another critical aspect of optimization. It’s all too easy to fall into the trap of having countless applications and browser tabs open, each nibbling away at precious RAM. Being mindful of what’s running in the background and closing unnecessary programs can make a substantial difference, particularly when working with large datasets that push your system’s memory limits.

Develop your project in a virtual environment

Virtual environments are not optional. They’re indispensable tools for maintaining a clean and organized development environment. Failing to utilize them can lead to dependency conflicts and versioning nightmares – a recipe for frustration and wasted time. Think of virtual environments as isolated sandboxes for your projects, ensuring that each one has the specific packages and dependencies it needs without interfering with others.
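As a small illustration, Python's standard library can both create a virtual environment and tell you whether the current interpreter is running inside one. This is a sketch under assumptions: the helper names and the `pytorch-env` directory name are made up for the example, and the environment is created without pip only to keep the demo fast.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def create_env(path):
    """Create a virtual environment at path and return its config file location."""
    venv.create(path, with_pip=False)
    return Path(path) / "pyvenv.cfg"


print("Inside a virtual environment:", in_virtualenv())

# Demo only: build a throwaway environment in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    config = create_env(Path(tmp) / "pytorch-env")
    print("Created environment, config file exists:", config.exists())
```

In day-to-day work the equivalent is usually `python -m venv .venv` from a terminal, followed by activating the environment before installing PyTorch into it.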

Use Jupyter Notebook

Finally, for an interactive and iterative development experience, Jupyter Notebooks are invaluable. While traditional IDEs have their place, Jupyter’s real-time feedback and visualization capabilities are ideal for experimenting with models and seeing the impact of code changes instantly. It’s a more dynamic approach to development, allowing for rapid prototyping and refinement.

Optimizing your PyTorch environment is an ongoing process of refinement, but the results speak for themselves: a smoother, more responsive system that allows you to focus on pushing the boundaries of what’s possible with AI.

Conclusion

When it comes to selecting the best laptop for PyTorch, the stakes are high. The right hardware can dramatically accelerate your deep learning workflows, reduce training times, and streamline your overall productivity. It’s a balancing act between performance and budget, but getting it right is crucial for maximizing efficiency and minimizing frustration.

A powerful GPU is non-negotiable if you’re serious about deep learning. Ensuring your laptop is optimized for PyTorch isn’t just a good practice—it’s essential for getting the most out of your investment. This can be the difference between smooth model training and hours of wasted time.

We’ve all been there, navigating the challenges of hardware selection and optimization. Through trial and error, I’ve learned what works and what doesn’t, and I’m sharing this knowledge to help you avoid common pitfalls.

But the conversation doesn’t stop here. I’m eager to hear about your experiences—what laptops have served you well, and what optimization techniques have made a difference? Your insights are invaluable, so share your thoughts in the comments. Let’s continue to push the boundaries of what’s possible with PyTorch, together.

Allan Witt

Allan Witt is co-founder and editor in chief of Hardware-corner.net. Computers and the web have fascinated me since I was a child. In 2011 I started training as an IT specialist in a medium-sized company and started a blog at the same time. I really enjoy blogging about tech. After successfully completing my training, I worked as a system administrator in the same company for two years. As a part-time job I started tinkering with pre-built PCs and building custom gaming rigs at a local hardware shop. The desire to build PCs full-time grew stronger, and now this is my full-time job.
