NVIDIA RTX 4000 SFF Ada for Large Language Models

The NVIDIA RTX 4000 Small Form Factor (SFF) Ada GPU has emerged as a compelling option for those looking to run Large Language Models (LLMs), like Llama 3.2, Mistral and Qwen2.5, in compact and power-efficient systems. While it may not grab headlines like its consumer-oriented RTX 4090 sibling, this professional-grade card offers a unique blend of performance, efficiency, and features that make it particularly well-suited for LLM workloads.

Specifications and Design

The RTX 4000 SFF Ada is built on NVIDIA’s latest Ada Lovelace architecture, offering:

- 6,144 CUDA cores

- 192 Tensor cores

- 48 RT cores

- 20GB GDDR6 memory with ECC

- 280 GB/s memory bandwidth

- 70W TDP

- PCIe 4.0 x16 interface

- Compact dual-slot design

The card’s 20GB of VRAM is a standout feature. It provides enough memory to run moderately sized quantized LLMs, up to the 32B model at 4-bit quantization benchmarked below. This generous VRAM allocation, combined with the Ada architecture’s improved efficiency, makes the 4000 SFF Ada an intriguing option for working with open-weight language models.
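To show why 20GB is enough for the models benchmarked in this article, here is a back-of-the-envelope sketch. It assumes that weight size is roughly parameters × bits per weight ÷ 8. The bits-per-weight values (about 4.8 for Q4_K_M, 4.25 for IQ4_XS), the rounded parameter counts and the flat 2GB headroom for KV cache and runtime overhead are our own approximations for illustration, not measured file sizes.

```python
# Back-of-the-envelope VRAM check for model weights, assuming
# weights_GB ~= parameters_in_billions * bits_per_weight / 8.
# Bits-per-weight values are approximations (real GGUF files vary a bit);
# KV cache and runtime overhead are covered only by a flat, hypothetical headroom.

APPROX_BITS_PER_WEIGHT = {
    "F16": 16.0,
    "Q4_K_M": 4.8,   # approximate average for this mixed quantization
    "IQ4_XS": 4.25,
}


def weight_gb(params_billion: float, quant: str) -> float:
    """Approximate size of the weights in GB (10^9 bytes)."""
    return params_billion * APPROX_BITS_PER_WEIGHT[quant] / 8


def fits_in_vram(params_billion: float, quant: str,
                 vram_gb: float = 20.0, headroom_gb: float = 2.0) -> bool:
    """True if weights plus a flat headroom (hypothetical 2 GB default) fit in VRAM."""
    return weight_gb(params_billion, quant) + headroom_gb <= vram_gb


if __name__ == "__main__":
    # Parameter counts are rounded approximations.
    for name, params, quant in [
        ("Llama 3.1 8B", 8.0, "Q4_K_M"),
        ("Llama 3.1 8B", 8.0, "F16"),
        ("Qwen2.5 32B", 32.5, "IQ4_XS"),
    ]:
        print(f"{name} {quant}: ~{weight_gb(params, quant):.1f} GB of weights, "
              f"fits in 20 GB: {fits_in_vram(params, quant)}")
```

Under these assumptions the Qwen2.5 32B IQ4_XS weights come to roughly 17.3GB. That fits on the 20GB card but leaves only a few GB for the KV cache.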

LLM Performance

In our benchmarks focused on LLM inference, the RTX 4000 SFF Ada demonstrates impressive capabilities, especially considering its compact form factor and low power consumption:

| GPU | Llama 3.1 8B Q4_K_M | Llama 3.1 8B F16 | Qwen2.5 32B IQ4_XS |
|---|---|---|---|
| RTX 4000 SFF Ada 20GB | 58.59 t/s | 20.85 t/s | 16.43 t/s |
| RTX 3090 24GB | 111.74 t/s | 46.51 t/s | 37.23 t/s |
| RTX 4090 24GB | 127.74 t/s | 54.34 t/s | 44.67 t/s |

For context, these results show the 4000 SFF Ada reaching about 44-52% of the performance of an RTX 3090 across the tested models and quantization levels. This is remarkable given the 4000’s significantly lower power envelope and smaller physical footprint.
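You can reproduce the percentage range directly from the table. This short Python sketch divides each RTX 4000 SFF Ada result by the matching RTX 3090 result. The dictionary keys are our own shorthand labels for the table columns.

```python
# Token generation speeds (tokens/s) copied from the benchmark table in this article.
RESULTS = {
    "RTX 4000 SFF Ada 20GB": {"8B Q4_K_M": 58.59, "8B F16": 20.85, "32B IQ4_XS": 16.43},
    "RTX 3090 24GB": {"8B Q4_K_M": 111.74, "8B F16": 46.51, "32B IQ4_XS": 37.23},
    "RTX 4090 24GB": {"8B Q4_K_M": 127.74, "8B F16": 54.34, "32B IQ4_XS": 44.67},
}


def relative_speed(gpu: str, baseline: str) -> dict:
    """Speed of `gpu` as a percentage of `baseline`, per benchmark."""
    return {
        test: 100 * tps / RESULTS[baseline][test]
        for test, tps in RESULTS[gpu].items()
    }


if __name__ == "__main__":
    for test, pct in relative_speed("RTX 4000 SFF Ada 20GB", "RTX 3090 24GB").items():
        print(f"{test}: {pct:.1f}% of an RTX 3090")
```

It prints 52.4% for 8B Q4_K_M, 44.8% for 8B F16 and 44.1% for 32B IQ4_XS. The gap therefore widens somewhat on the heavier workloads.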

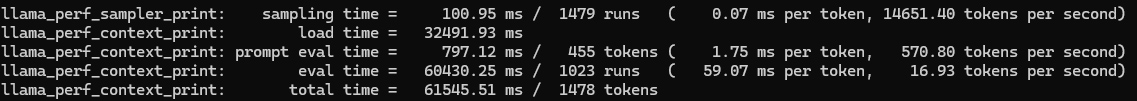

llama.cpp running Qwen2.5 32B IQ4_XS on RTX 4000 SFF Ada

The 32B IQ4_XS benchmark uses the Qwen2.5-32B-Instruct-IQ4_XS model, currently one of the best open-weight LLMs. It shows that the 4000 can handle larger, more complex models while maintaining respectable performance. At 16.43 tokens/second, it offers a fluid experience for many LLM-based applications.

Real world application

Instead of just throwing benchmarks around, we wanted to see the NVIDIA RTX 4000 SFF Ada in action. So we built a compact, completely private LLM inference server powered by this card and an OptiPlex small form factor PC. Our goal was Alexa-like responses without the “Alexa” part (no cloud, all local processing). A Willow device (ESP32-S3-BOX) captures your voice, the RTX 4000 SFF Ada running Llama 3.1 8B does the thinking, and a speaker delivers the answers.

OptiPlex SFF Desktop with RTX 4000 SFF Ada

Willow ESP32-S3-BOX device

The result? Snappy performance, surprisingly natural conversations, and all within a system that fits comfortably on a bookshelf. Who needs bulky servers?

Limitations

While the NVIDIA RTX 4000 SFF Ada excels as a compact and power-efficient solution for running moderately sized LLMs, it’s essential to acknowledge its limitations, especially when compared to the reigning champion of locally hosted LLMs, the GeForce RTX 3090.

The 4000’s much lower memory bandwidth (280 GB/s vs. the 3090’s 936 GB/s) is the main reason it generates roughly half as many tokens per second in our benchmarks. Furthermore, the 4000 currently commands a price premium on the used market, hovering around $1100, while a used RTX 3090 can be found for roughly $650. Heck, you could even snag a pair of used RTX 4060 Ti 16GB (32GB total) cards and have yourself a pretty potent LLM setup for under $800.

This price difference, coupled with the 3090’s raw performance advantage, makes the consumer-grade card a compelling alternative, provided its power consumption and physical size are manageable within the user’s deployment scenario. For those prioritizing frugality and top-end performance above all else, the 3090 remains a strong contender, even against newer professional-grade offerings.
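One simple way to quantify the price gap is purchase price per token/s. The sketch below uses the approximate used prices quoted above and the Llama 3.1 8B Q4_K_M results from the benchmark table. It ignores electricity, the rest of the system and price changes, so treat it as illustrative only.

```python
# Used-market prices quoted in this article (USD, approximate and volatile)
# and Llama 3.1 8B Q4_K_M speeds from the benchmark table (tokens/s).
USED_PRICE_USD = {"RTX 4000 SFF Ada": 1100, "RTX 3090": 650}
SPEED_8B_Q4_TPS = {"RTX 4000 SFF Ada": 58.59, "RTX 3090": 111.74}


def dollars_per_tps(gpu: str) -> float:
    """Purchase price divided by token generation speed; lower means better value."""
    return USED_PRICE_USD[gpu] / SPEED_8B_Q4_TPS[gpu]


if __name__ == "__main__":
    for gpu in USED_PRICE_USD:
        print(f"{gpu}: ${dollars_per_tps(gpu):.2f} per token/s")
```

With these inputs, the RTX 4000 SFF Ada costs about $18.77 per token/s and the RTX 3090 about $5.82, so the 4000 is roughly 3.2 times as expensive per unit of speed.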

Power Efficiency and Thermal Performance

One of the most impressive aspects of the RTX 4000 SFF Ada is its power efficiency. With a 70W TDP, it delivers substantial LLM performance while drawing only a fraction of the 350W and 450W board power of the RTX 3090 and RTX 4090. This efficiency translates into lower operating costs and reduced cooling requirements, making it an excellent choice for 24/7 operation or for deployment in space-constrained environments.
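A crude way to put the efficiency in numbers is tokens per second per watt of rated board power. The sketch below uses the 70W figure from this article, NVIDIA's reference ratings of 350W (RTX 3090) and 450W (RTX 4090), and the 8B Q4_K_M results. Actual draw during inference is usually below these ratings, so this is a proxy and not a measured efficiency.

```python
# Board power limits in watts. 70 W comes from this article; 350 W (RTX 3090) and
# 450 W (RTX 4090) are NVIDIA's reference total graphics power ratings.
# Actual draw during LLM inference is usually below these limits, so this is
# a rough proxy, not a measured efficiency figure.
BOARD_POWER_W = {"RTX 4000 SFF Ada": 70, "RTX 3090": 350, "RTX 4090": 450}

# Llama 3.1 8B Q4_K_M token generation speeds from the benchmark table (tokens/s).
SPEED_8B_Q4_TPS = {"RTX 4000 SFF Ada": 58.59, "RTX 3090": 111.74, "RTX 4090": 127.74}


def tps_per_watt(gpu: str) -> float:
    """Tokens per second per watt of rated board power."""
    return SPEED_8B_Q4_TPS[gpu] / BOARD_POWER_W[gpu]


if __name__ == "__main__":
    for gpu in BOARD_POWER_W:
        print(f"{gpu}: {tps_per_watt(gpu):.2f} tokens/s per rated watt")
```

By this measure the 4000 SFF Ada delivers roughly 0.84 tokens/s per rated watt. That is about 2.6 times the RTX 3090's 0.32 and nearly three times the RTX 4090's 0.28.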

Thermal performance is equally noteworthy, with the card maintaining temperatures around or below 70°C under sustained load. The well-designed cooling solution allows for consistent performance without thermal throttling, even in compact systems with limited airflow.
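To check thermal behavior on your own build, you can poll the card with `nvidia-smi` while a model is generating. This sketch assumes `nvidia-smi` is on the PATH. The field list, the 5-second interval and the helper names (`parse_sample`, `watch_gpu`) are our own choices. Logging the SM clock alongside temperature and power makes it easy to spot a clock drop under sustained load, which would suggest throttling.

```python
import subprocess
from typing import Dict, Iterator, Optional

# Fields passed to nvidia-smi's --query-gpu option.
FIELDS = ["temperature.gpu", "power.draw", "clocks.sm"]


def _to_float(value: str) -> Optional[float]:
    """Convert one CSV field; nvidia-smi reports unsupported fields as N/A."""
    value = value.strip()
    return None if "N/A" in value else float(value)


def parse_sample(line: str) -> Dict[str, Optional[float]]:
    """Parse one line of `--format=csv,noheader,nounits` output (one line per GPU)."""
    return dict(zip(FIELDS, (_to_float(v) for v in line.split(","))))


def watch_gpu(interval_s: int = 5) -> Iterator[Dict[str, Optional[float]]]:
    """Yield samples every `interval_s` seconds until the caller stops iterating."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
        "-l", str(interval_s),
    ]
    proc = subprocess.Popen(cmd, stdout=subprocess.PIPE, text=True)
    try:
        for line in proc.stdout:
            if line.strip():
                yield parse_sample(line)
    finally:
        # nvidia-smi -l loops forever, so stop it explicitly.
        proc.terminate()
        proc.wait()
        proc.stdout.close()
```

Usage: run `for sample in watch_gpu(): print(sample)` in one terminal while the model generates in another, and stop it with Ctrl+C.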

Professional Features

As a member of NVIDIA’s professional GPU lineup, the RTX 4000 SFF Ada includes several features that set it apart from consumer cards:

- Error-Correcting Code (ECC) memory for enhanced reliability

- Optimized drivers for professional applications

- Support for NVIDIA GPUDirect technology

- Four Mini DisplayPort 1.4 outputs

These professional-grade features, while not directly impacting LLM performance, contribute to the overall stability and versatility of systems built around the 4000.

Use Cases and Positioning

The RTX 4000 SFF Ada excels in scenarios where power efficiency, compact size, and moderate LLM performance are prioritized. It’s particularly well-suited for:

- Edge AI deployments requiring local LLM inference

- Compact workstations for AI researchers and developers

- Home lab setups for experimenting with AI and machine learning

- Small-scale production environments running multiple AI services

While it may not match the raw performance of high-end consumer GPUs like the RTX 4090, the 4000 SFF Ada is, in our view, the most powerful SFF GPU option for LLM workloads. Its power efficiency and compact form factor also make it a well-balanced choice.

Conclusion

The NVIDIA RTX 4000 SFF Ada represents a significant step forward in bringing powerful LLM capabilities to compact, energy-efficient systems. Its ability to run moderately sized language models at respectable speeds, all while consuming just 70W of power, makes it a standout option in the professional GPU market.

For users prioritizing a balance of performance, efficiency, and size in their LLM workflows, the RTX 4000 SFF Ada emerges as a top choice. It may not be the fastest option available, but its unique combination of features and capabilities makes it arguably the most well-rounded SFF GPU for LLM applications currently on the market.

As the field of AI and machine learning continues to evolve, GPUs like the 4000 SFF Ada demonstrate that impressive AI performance need not be limited to power-hungry, oversized cards. For many users, this compact powerhouse may well represent the sweet spot in the current landscape of AI-focused GPUs.

Allan Witt

Allan Witt is Co-founder and editor in chief of Hardware-corner.net. Computers and the web have fascinated me since I was a child. In 2011 I started training as an IT specialist in a medium-sized company and started a blog at the same time. I really enjoy blogging about tech. After successfully completing my training, I worked as a system administrator in the same company for two years. As a part-time job, I started tinkering with pre-built PCs and building custom gaming rigs at a local hardware shop. The desire to build PCs full-time grew stronger, and now this is my full-time job.

7 Comments


Great overview! Have you compared the 4000 SFF Ada’s performance to the RTX A4500? It seems like another contender.

You’re absolutely right. The RTX A4500 is a strong contender.

However, our focus for this article was specifically on compact SFF builds. The A4500, while powerful, is a full-sized card, making it less suitable for the small form factor systems we were targeting.

For a home user building a regular desktop system, the RTX A4500 doesn’t quite make sense when compared to the RTX 3090. The A4500, being a professional-grade card, comes with a significantly higher price tag. When you factor in that the RTX 3090 generally outperforms it in raw speed, the choice becomes even clearer. If you’re building a standard desktop PC and not confined by size constraints, the RTX 3090 gives you more bang for your buck. It’s a much better fit for a powerful, non-SFF home build.

gm

I was inspired to repeat your success.

I am trying to install an A4000 Ada SFF on my Optiplex 3070, and I am unfortunately having the hardest time getting the A4000 Windows 11 drivers to load.

Are there any particular settings in BIOS that you were able to set to get yours working?

Any hints you may have to getting the nVidia drivers installed correctly?

Thank you!

Could you tell me if your OptiPlex 3070 is the larger Tower (MT) or the slim Small Form Factor (SFF) model?

Beyond that, the most important first step is to update your computer’s BIOS to the latest version from Dell’s support site.

You should also try going into the BIOS settings and temporarily disabling the “Secure Boot” option.

Finally, for the driver itself, use a tool like Display Driver Uninstaller (DDU) to completely wipe any old versions before trying to install the new one.

Appreciate your thoughts, Allan.

I am using a 3070 SFF (about 3L), and have the (PNY) A4000 SFF Ada connected to a PCIe4.0 x16 ribbon open-case (the factory PSU blocks 2-slot cards).

CPU is i5-9XXX, and (I believe) this MB’s x16 is PCIe-3.0

I finally updated to the latest BIOS this morning (1.32.0 IIRC).

The fan comes-on, but the card gets really hot.

I have the BIOS-GPU set to Auto, as setting the A4000 as the Default produces no screens.

Win11 registers the A4000, but no matter which driver I install, I still get Error 43.

If I remember correctly, you used an Intel 10XXX CPU-enabled unit . . . could the 9-Series ultimately be the cutoff?

I have read hundreds of “This doesn’t work!” posts on the Interwebs, and many of the suggestions as to why we (I!) get Error 43 are related to the “CPU”.

I’m not really concerned, directly, with ultimately getting this GPU to work with just this Optiplex 3070; what I would really like to do is determine whether I can actually use such a card in another (newer) system without wasting $ on additional hardware ($ purchases which are not insignificant).

Regards, splifingate

3070 SFF; 9-Series processor.

IIRC, I have updated to the latest bios (1.32.x), and have vid-card selection at Multi-Screen, and Auto.

I have read a thousand posts on the Interwebs about how to deal with Error 43, and–no matter how many times I install, clean, re-install, DDU, etc. the Drivers–I am eventually presented with the yellow exclamation triangle in Device Manager *grr*

This is a NIB PNY A4000 SFF, and (though I cannot truly tell), I’m pretty-sure that I am the first person to power-on this very card.

I understand that the Optiplex 3070 is PCIe 3.0–and that it is not really designed for such a card–but (all things being equal), it should at-least post the card, and allow me to install the drivers.

Obviously, I’m not the only one out there generally having difficulty installing the nVidia Drivers, but it’s definitely possible (hence all the success I have seen).

I just don’t want to invest all the $ it takes to step-up to newer hardware (which is my current goal) if I will face the same challenges.

I really appreciate your time and thoughts on the matter.

One thing I can think of is maybe the power: the system might not be supplying enough for all the components. When a GPU like the RTX 4000 Ada isn’t getting stable or sufficient power, it can heat up quickly and fail to initialize properly, which may result in errors.

I don’t have the system I tested on in front of me, but I’m fairly certain it was using a 500W PSU, something like the H500EPM-00 model. The 200W PSU in the 3070 SFF may simply not be enough to support a sustained 70W GPU load, especially through a riser. I’ll double-check when I get a chance, but power delivery is definitely something I’d look closely at here.

One other thing you could try, at your own discretion, is removing the PSU from the case temporarily to free up space for the GPU and test it directly in the PCIe slot without the riser cable.