Maximize Efficiency in LLM Training: New Software Offers 80% Speed Increase with 50% Less Memory

The new AI startup Unsloth has unveiled its latest product, aimed at Large Language Model (LLM) training. Its flagship software promises up to 30x faster LLM training with a 60% reduction in memory usage and no compromise on accuracy. The approach is meant to make training more efficient and more accessible for developers and researchers who train LLMs locally.

AI Training

Unsloth attributes these gains to several techniques. Key among them is a manual autograd engine: the backpropagation steps are derived by hand rather than left entirely to automatic differentiation, which streamlines the training process. For QLoRA/LoRA fine-tuning, the company reports 80% faster training and 50% less memory usage. All of Unsloth’s kernels are written in OpenAI’s Triton language, which the company credits for both precision and efficiency.
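To make "hand-derived backpropagation" concrete, here is a minimal sketch of what it can look like for a single LoRA layer. It is not Unsloth's code, which is written in Triton; the layer form y = xW + s(xA)B, the helper names, and the tiny matrix sizes are all assumptions chosen for illustration. The gradients are written out by hand and then checked against a finite-difference estimate.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def add(a, b):
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(m, s):
    return [[p * s for p in row] for row in m]

def lora_forward(x, W, A, B, s):
    """y = x W + s * (x A) B, with W frozen and A, B trainable (assumed form)."""
    xA = matmul(x, A)  # kept so the backward pass can reuse it
    y = add(matmul(x, W), scale(matmul(xA, B), s))
    return y, xA

def lora_backward(dy, x, W, A, B, s, xA):
    """Hand-derived gradients, given dy = dL/dy for some scalar loss L."""
    dyBt = matmul(dy, transpose(B))                # shared by dA and dx
    dB = scale(matmul(transpose(xA), dy), s)       # dL/dB = s * (xA)^T dy
    dA = scale(matmul(transpose(x), dyBt), s)      # dL/dA = s * x^T (dy B^T)
    dx = add(matmul(dy, transpose(W)),             # dL/dx = dy W^T + s * (dy B^T) A^T
             scale(matmul(dyBt, transpose(A)), s))
    return dx, dA, dB

def loss(x, W, A, B, s):
    """Toy loss: the sum of all outputs, so dL/dy is a matrix of ones."""
    y, _ = lora_forward(x, W, A, B, s)
    return sum(sum(row) for row in y)

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

# Hypothetical tiny sizes so the check runs instantly.
rng = random.Random(0)
n, d_in, d_out, r, s = 3, 4, 5, 2, 0.5
x, W = rand_matrix(n, d_in, rng), rand_matrix(d_in, d_out, rng)
A, B = rand_matrix(d_in, r, rng), rand_matrix(r, d_out, rng)

y, xA = lora_forward(x, W, A, B, s)
dy = [[1.0] * d_out for _ in range(n)]
dx, dA, dB = lora_backward(dy, x, W, A, B, s, xA)

# Central finite difference on one entry of A as a sanity check.
eps = 1e-6
A_plus = [row[:] for row in A]
A_minus = [row[:] for row in A]
A_plus[0][0] += eps
A_minus[0][0] -= eps
numeric_dA00 = (loss(x, W, A_plus, B, s) - loss(x, W, A_minus, B, s)) / (2 * eps)
print("analytic:", dA[0][0], "numeric:", numeric_dA00)
```

Writing the backward pass by hand makes it easy to see which intermediates can be reused (here `xA` and `dyBt`) and that no gradient is ever computed for the frozen weight `W`. Savings of this kind are one plausible source of the reported gains, though the article does not spell out which ones Unsloth exploits.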

The 50% reduction in memory (VRAM) usage applies specifically to QLoRA/LoRA training. For full finetuning with this method, the reduction is somewhat lower, estimated at around 30%.
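As a rough illustration of why LoRA-style training needs less memory than full finetuning, the sketch below counts trainable parameters for one weight matrix. The 4096 x 4096 shape and rank 16 are hypothetical values, not figures from Unsloth, and the calculation does not reproduce the 50% or 30% numbers above, since total VRAM also depends on activations, optimizer state, and the rest of the model.

```python
def full_trainable_params(d_in, d_out):
    """Full finetuning updates every entry of the weight matrix."""
    return d_in * d_out

def lora_trainable_params(d_in, d_out, rank):
    """LoRA trains two low-rank factors: A (d_in x rank) and B (rank x d_out)."""
    return rank * (d_in + d_out)

# Hypothetical layer shape and adapter rank, chosen only for illustration.
d_in = d_out = 4096
rank = 16

full = full_trainable_params(d_in, d_out)
lora = lora_trainable_params(d_in, d_out, rank)
ratio = lora / full

# Storage for the frozen base weights: 16-bit vs. 4-bit as in QLoRA
# (ignores the small overhead of quantization constants).
fp16_bytes = full * 2
four_bit_bytes = full // 2

print(f"full finetuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters ({ratio:.2%} of full)")
print(f"frozen weights: {fp16_bytes:,} bytes in 16-bit, {four_bit_bytes:,} bytes in 4-bit")
```

Gradients and optimizer state are only kept for trainable parameters, so shrinking that set reduces memory directly. QLoRA additionally stores the frozen base weights in 4-bit form.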

Currently, the package supports only Llama-based models, but Mistral support is planned for the near future.

Compatibility and Accessibility

One of the most compelling aspects of Unsloth is its compatibility with a wide range of hardware. It supports NVIDIA GPUs from 2018 onwards, including popular models such as the Tesla T4, the RTX 20, 30, and 40 series, the A100, and the H100. Because older cards are covered, a broad range of users can benefit from these advancements without buying new hardware.

Impressive Performance Metrics

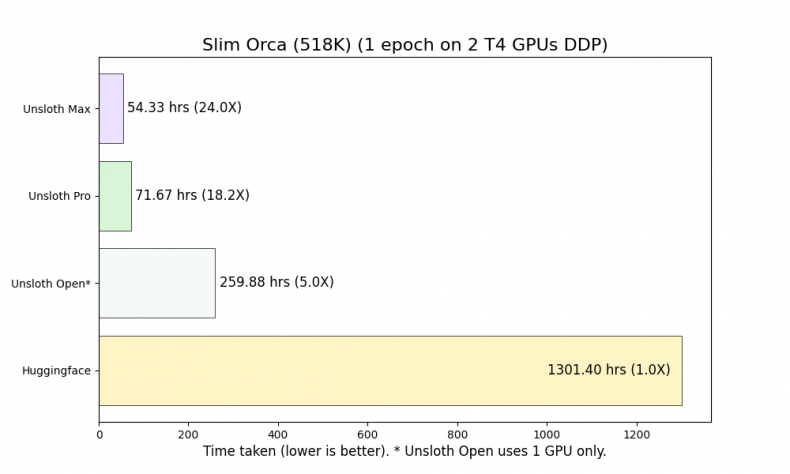

The performance improvements with Unsloth are not just theoretical. In one practical example, training on the Alpaca dataset with a Tesla T4 GPU, Unsloth’s Max offering reduces the time from 23 hours to just over 2 hours. Unsloth reports similar time and memory savings across other datasets and GPU configurations.

Open Source

In a move to support the broader AI community, Unsloth has released an open-source version of their software. This version still offers substantial improvements in training speed and memory usage, making it accessible for a wider audience, including individual developers and smaller organizations.

Future Plans and Community Engagement

Looking ahead, Unsloth plans to extend its capabilities to more hardware platforms, including Intel and AMD GPUs. They are also exploring options like sqrt gradient checkpointing to further reduce memory usage. In addition to these technical developments, Unsloth is actively seeking community engagement and partnerships to expand its reach and impact.
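For readers unfamiliar with sqrt gradient checkpointing, the general idea is to keep activations only at roughly every sqrt(n)-th layer and recompute the rest during the backward pass, trading extra computation for memory. The sketch below shows the bookkeeping for that general technique. It is not a description of Unsloth's planned implementation, and the function name, the 32-layer example, and the simple peak-memory estimate are assumptions.

```python
import math

def sqrt_checkpoint_plan(num_layers):
    """Choose checkpoint positions spaced about sqrt(num_layers) layers apart."""
    # ceil(sqrt(n)) computed with integers to avoid floating-point rounding.
    segment = math.isqrt(num_layers - 1) + 1
    checkpoints = list(range(0, num_layers, segment))
    # Rough peak count of stored activations: every checkpoint, plus the
    # activations of the one segment being recomputed during backward.
    peak = len(checkpoints) + segment
    return {"segment": segment, "checkpoints": checkpoints, "peak": peak}

# Hypothetical 32-layer model.
plan = sqrt_checkpoint_plan(32)
print("checkpoint every", plan["segment"], "layers at", plan["checkpoints"])
print("stored activations (rough): 32 without checkpointing,", plan["peak"], "with")
```

The cost is recomputing the forward pass within each segment during backpropagation, which is why the technique saves memory rather than time.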