Meta Releases Llama 4: Here’s the Hardware You’ll Need to Run It Yourself

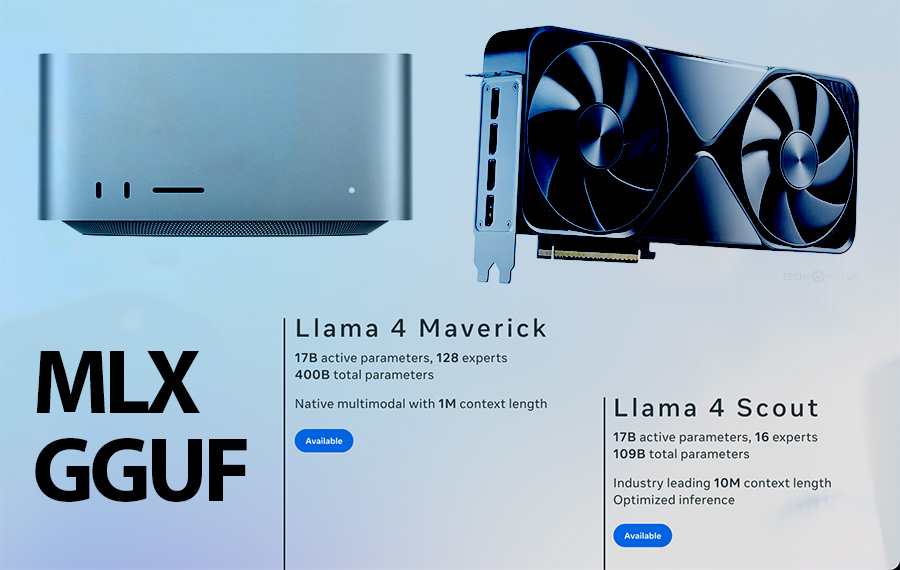

Meta has just released Llama 4, the latest generation of its open large language model family, and this time it’s swinging for the fences. With two variants, Llama 4 Scout and Llama 4 Maverick, Meta is introducing a Mixture of Experts (MoE) architecture and support for extremely long context windows (up to 10 million tokens). That’s exciting not just for enterprise deployments, but also for the local AI enthusiast crowd.

If you’re in the business of building your own rig to run quantized LLMs locally, you’re probably wondering: can I actually run Llama 4 at home? The short answer is: yes, with the right hardware – and a lot of memory.

Below, we’ll break down what you need for each model, for both MLX (Apple Silicon) and GGUF (llama.cpp-compatible runtimes on Apple Silicon or PC) builds, with a focus on performance-per-dollar, memory constraints, and hardware availability for price-conscious builders.

Understanding Llama 4’s Architecture

Before we get into specs, here’s what makes Llama 4 different:

Llama 4 Scout

- Model Size: 17B active parameters, 16 experts (109B total)

- Context Window: 10 million tokens

- Implication: Moderate memory needed to load the weights, but the KV cache grows with context length, so using anything close to the full 10M-token window demands massive additional memory.
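Most of that context-driven memory is the KV cache: for every token in the context, each attention layer keeps a key and a value vector per KV head, so the cache grows linearly with context length. The sketch below uses the standard naive formula. The layer count, KV-head count and head dimension are hypothetical placeholders, not Llama 4’s published config, and the estimate ignores any cache-saving techniques the model or runtime may apply, so treat it as an order-of-magnitude illustration only.

```python
def kv_cache_bytes(tokens: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Naive KV-cache size: one key and one value vector per layer, KV head and token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * tokens

# Hypothetical placeholder config (NOT Llama 4's real values); read the actual
# numbers from the model's config.json before relying on the result.
N_LAYERS, N_KV_HEADS, HEAD_DIM = 48, 8, 128

for tokens in (8_192, 131_072, 10_000_000):
    gb = kv_cache_bytes(tokens, N_LAYERS, N_KV_HEADS, HEAD_DIM) / 1e9
    print(f"{tokens:>10,} tokens -> {gb:,.1f} GB of fp16 KV cache")
```

With these placeholder values the cache costs 192 KiB per token, so a 10M-token window would need roughly 2 TB on top of the weights, which is why the load-only figures below say nothing about full-context use.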

Llama 4 Maverick

- Model Size: 17B active parameters, 128 experts (400B total)

- Context Window: 1 million tokens

- Implication: A much larger memory footprint, but only a small subset of parameters is active per token, so inference is fast relative to the model’s size while load times and memory requirements are heavy.
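The key MoE trade-off in both variants is that memory capacity scales with the total parameter count, while the weights actually read for each generated token scale with the active count. A minimal sketch using the article’s parameter counts, assuming a flat 4 bits per weight and ignoring quantization metadata:

```python
def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Bytes needed to store `params` weights at a given average bit width."""
    return params * bits_per_weight / 8

# (total parameters, active parameters per token), as stated in the article
MODELS = {"Scout": (109e9, 17e9), "Maverick": (400e9, 17e9)}

BITS = 4  # plain 4-bit; real quantized files carry some extra metadata
for name, (total, active) in MODELS.items():
    resident_gb = weight_bytes(total, BITS) / 1e9    # must fit in memory
    per_token_gb = weight_bytes(active, BITS) / 1e9  # roughly what each token reads
    share = active / total
    print(f"{name}: {resident_gb:.1f} GB resident, "
          f"{per_token_gb:.1f} GB read per token ({share:.1%} of weights)")
```

Both models read about the same 8.5 GB per token, but Maverick needs nearly four times as much memory to hold everything, which is why it is the harder of the two to host.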

Llama 4 Scout: Hardware Requirements

MLX (Apple Silicon) – Unified Memory Requirements

These figures cover loading the model weights, not full-context inference. Longer contexts increase memory use significantly.

| Quantization | Unified Memory Needed | Recommended Apple Systems |
|---|---|---|
| 3-bit | 48 GB | M4 Pro, M1/M2/M3/M4 Max, M1/M2/M3 Ultra |
| 4-bit | 61 GB | M2/M3/M4 Max, M1/M2/M3 Ultra |
| 6-bit | 88 GB | M2/M3/M4 Max, M1/M2/M3 Ultra |
| 8-bit | 115 GB | M4 Max, M1/M2/M3 Ultra |
| fp16 | 216 GB | M3 Ultra |
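The MLX figures line up with a simple rule of thumb. A minimal sketch, assuming MLX-style group-wise affine quantization with a group size of 64 and one 16-bit scale plus one 16-bit bias stored per group, which adds 0.5 bits per weight:

```python
def mlx_weight_gb(params: float, bits: int, group_size: int = 64,
                  meta_bits: int = 16) -> float:
    """Approximate size of group-quantized weights in GB.

    Assumes each group of `group_size` weights stores one scale and one bias
    of `meta_bits` bits each, i.e. 2 * meta_bits / group_size extra bits/weight.
    """
    effective_bits = bits + 2 * meta_bits / group_size
    return params * effective_bits / 8 / 1e9

SCOUT_PARAMS = 109e9  # total parameters, from the article
for bits in (3, 4, 6, 8):
    print(f"{bits}-bit: ~{mlx_weight_gb(SCOUT_PARAMS, bits):.0f} GB")
print(f"fp16: ~{SCOUT_PARAMS * 2 / 1e9:.0f} GB")  # 2 bytes per weight, no groups
```

Each estimate lands within about 2 GB of the table. Plugging in Maverick’s 400e9 parameters gives about 225 GB at 4-bit and 325 GB at 6-bit, close to its MLX figures further down.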

GGUF – PC/Server RAM & VRAM Requirements

| Quantization | RAM/VRAM Needed | Recommended Systems |
|---|---|---|
| Q3_K_M | 55 GB | 3×24GB GPUs (e.g., 3090s), 2×RTX 5090, 64GB RAM, Ryzen AI Max+ (64GB+), DGX Spark (128GB) |
| Q4_K_M | 68 GB | RTX PRO 6000, 3×24GB GPUs, 96GB RAM, Ryzen AI Max+ or DGX Spark with 128GB memory |
| Q6_K | 90 GB | 3×RTX 5090, 128GB RAM, Ryzen AI Max+ or DGX Spark (128GB) |
| Q8_0 | 114 GB | 2×RTX PRO 6000, 4×32GB GPUs, 128GB RAM |
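GGUF quantization names are nominal: per-block scale metadata and, in llama.cpp’s K-quant “_M” variants, some tensors stored at a higher-precision type push the average above the number in the name. A quick sketch back-solving the average bits per weight from the Scout sizes above (pure arithmetic on the article’s numbers):

```python
# Sizes in GB from the Scout GGUF table; parameter count from the article.
SCOUT_PARAMS = 109e9
GGUF_SIZES_GB = {"Q3_K_M": 55, "Q4_K_M": 68, "Q6_K": 90, "Q8_0": 114}

def implied_bits_per_weight(size_gb: float, params: float) -> float:
    """Average bits stored per parameter, back-solved from file size."""
    return size_gb * 1e9 * 8 / params

for quant, size in GGUF_SIZES_GB.items():
    bpw = implied_bits_per_weight(size, SCOUT_PARAMS)
    print(f"{quant}: ~{bpw:.2f} bits/weight")
```

For example, Q4_K_M works out to roughly 5 bits per weight rather than 4, which is a handy figure for sizing other models at the same quantization.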

Llama 4 Maverick: Hardware Requirements

This one is beefy. While only 17B parameters are active per token, all 400B must be resident in memory, which pushes memory limits hard, especially at higher precision.

MLX (Apple Silicon)

| Quantization | Unified Memory Needed | Recommended Apple Systems |
|---|---|---|
| 4-bit | 226 GB | M3 Ultra (config w/ 256GB+) |
| 6-bit | 326 GB | M3 Ultra (512GB only) |

GGUF – PC/Server RAM & VRAM Requirements

| Quantization | RAM/VRAM Needed | Recommended Systems |
|---|---|---|
| Q3_K_M | 192 GB | 3×96GB RTX PRO 6000, 7 GPUs averaging 32GB (e.g., an A6000/3090 mix), 256GB RAM server |
| Q4_K_M | 245 GB | 8×32GB GPUs or more, 320GB RAM, dual-CPU workstation with 16+ memory channels |
| Q6_K | 329 GB | 4×96GB RTX PRO 6000, server with 384GB RAM |
| Q8_0 | 400 GB | 512GB RAM server |
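The multi-GPU counts follow from simple division with a little per-card headroom. A minimal sketch, assuming identical GPUs and a hypothetical 1 GB per-card reserve for the runtime and buffers; it covers weights only, so long contexts need extra cards for the KV cache:

```python
import math

def gpus_needed(model_gb: float, vram_gb: float, reserve_gb: float = 1.0) -> int:
    """Minimum number of identical GPUs whose usable VRAM holds the weights.

    reserve_gb is an assumed per-GPU allowance, not a measured value.
    KV cache for long contexts is NOT included.
    """
    usable = vram_gb - reserve_gb
    if usable <= 0:
        raise ValueError("reserve_gb must be smaller than vram_gb")
    return math.ceil(model_gb / usable)

# Maverick GGUF sizes from the table
print(gpus_needed(192, 96))  # Q3_K_M on 96GB cards -> 3
print(gpus_needed(192, 32))  # Q3_K_M on 32GB cards -> 7
print(gpus_needed(245, 32))  # Q4_K_M on 32GB cards -> 8
print(gpus_needed(329, 96))  # Q6_K on 96GB cards  -> 4
```

In practice, runtimes split the model layer by layer, so per-card usage is uneven and a layout that fits on paper can still need one more card.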

CPU Inference?

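Because of Llama 4’s MoE structure, running the model entirely on CPU is a viable option, provided the system has enough memory capacity and bandwidth: only the ~17B active parameters are read for each generated token, even though all parameters must be resident in RAM. Scout is the more practical candidate, since its 109B total parameters need far less RAM than Maverick’s 400B. While not optimal for low-latency tasks, this setup can be effective for throughput-focused workloads on high-memory desktop or server-class systems.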

If you have a DDR5 platform with high memory bandwidth, this could be a surprisingly practical path, especially with the recently announced DDR5-8000 and DDR5-9000 memory modules.
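Why bandwidth matters: token generation is usually memory-bandwidth bound, since each token has to stream the active weights from RAM. A back-of-envelope ceiling is peak bandwidth divided by the bytes read per token. This sketch assumes 64-bit memory channels, 17B active parameters, and ~5 bits per weight (roughly what the Q4_K_M sizes above imply); it ignores KV-cache reads, compute limits, and real-world bandwidth efficiency, so actual speeds will be lower:

```python
def peak_bandwidth_gbs(mt_per_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (64-bit channels by default)."""
    return mt_per_s * 1e6 * bus_bytes * channels / 1e9

def max_tokens_per_s(bandwidth_gbs: float, active_params: float,
                     bits_per_weight: float) -> float:
    """Upper bound on decode speed if each token streams the active weights once."""
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gbs * 1e9 / bytes_per_token

ACTIVE = 17e9  # active parameters per token (both Llama 4 variants)
BPW = 5.0      # assumed average bits/weight, roughly Q4_K_M

for label, mts, ch in [("dual-channel DDR5-8000", 8000, 2),
                       ("12-channel DDR5-6000 server", 6000, 12)]:
    bw = peak_bandwidth_gbs(mts, ch)
    print(f"{label}: {bw:.0f} GB/s -> <= {max_tokens_per_s(bw, ACTIVE, BPW):.1f} tok/s")
```

The same arithmetic shows why MoE helps: a dense 109B model at the same bit width would have to stream more than six times as many bytes per token.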

Looking Ahead

AMD Ryzen AI Max+ systems and NVIDIA’s DGX Spark offer unified memory pools of up to 128GB, which could significantly lower the barrier to running Scout-class models locally.

Final Thoughts

Meta’s Llama 4 pushes the boundary of what’s possible on local systems – but it doesn’t shut the door to DIY enthusiasts. Thanks to its Mixture of Experts architecture, you can run enormous models – as long as you’re willing to invest in the memory footprint.

The performance-per-dollar curve still favors older, high-VRAM GPUs, and with some clever hardware choices, you can absolutely bring Llama 4 to your local stack.

* The article was updated on April 7, 2025 (PDT) to reflect correct GGUF quantized file sizes.