96GB VRAM LLM PC Build Under $10K? RTX Pro 6000 vs. Dual 48GB 4090s: Which Wins?

As AI models grow larger and more demanding, the need for high-VRAM GPUs has never been greater. Running a 70B-parameter model like Llama 3.3, which supports a 128K-token context window, takes a lot of VRAM even with 4-bit quantization. NVIDIA's newly announced RTX Pro 6000 offers a straightforward 96GB VRAM solution, but its $8,565 price tag places it firmly in the professional segment. Meanwhile, a new wave of modified RTX 4090 cards from China, offering 48GB each, has emerged as a potential alternative: a dual-GPU setup reaches the same 96GB while keeping the build simple, with an affordable motherboard and a single PSU.

NVIDIA's recent reveal of the Blackwell-based RTX Pro 6000 pricing has sent ripples through the professional and enthusiast AI communities. At a seemingly reasonable $8,565, the card boasts a staggering 96GB of VRAM with a blistering 1.8 TB/s of memory bandwidth. On paper, this positions it as a potential powerhouse for local LLM inference and fine-tuning.


But then, a shadow looms from the East. Reports are surfacing of modified NVIDIA GeForce RTX 4090 cards in China with 48GB of GDDR6X memory, selling for around $3,400 each. Double that up, a fairly straightforward proposition given how common dual-GPU setups are, and you have a 96GB behemoth for about $6,800, roughly $1,765 less than the RTX Pro 6000.
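A quick way to compare the two routes is dollars per GB of VRAM. The sketch below uses the street prices quoted in this article ($8,565 for the RTX Pro 6000, about $3,400 per modded 48GB 4090); these are snapshots that will drift, and the constant names are illustrative only.

```python
# Prices quoted in this article (USD); treat them as snapshots, not list prices.
RTX_PRO_6000_PRICE = 8565       # one card, 96GB
MODDED_4090_48GB_PRICE = 3400   # one card, 48GB (approximate Chinese market price)
TOTAL_VRAM_GB = 96              # both builds end up with 96GB

def cost_per_gb(total_price: float, total_vram_gb: float) -> float:
    """Dollars paid per GB of VRAM."""
    return total_price / total_vram_gb

pro_total = RTX_PRO_6000_PRICE
dual_4090_total = 2 * MODDED_4090_48GB_PRICE
savings = pro_total - dual_4090_total

print(f"Dual 48GB 4090: ${dual_4090_total:,} (${cost_per_gb(dual_4090_total, TOTAL_VRAM_GB):.2f}/GB)")
print(f"RTX Pro 6000:   ${pro_total:,} (${cost_per_gb(pro_total, TOTAL_VRAM_GB):.2f}/GB)")
print(f"Savings:        ${savings:,}")
```

At these prices the gap is $1,765, before counting any difference in motherboard, PSU, or cooling costs.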

A Closer Look at the Contenders

The RTX Pro 6000 comes in both a 600W flow-through design and a 300W blower version (both at the same $8,565 price point) and is clearly aimed at workstation and server deployments. The 600W variant, with its higher clock speeds, promises peak performance, while the 300W version trades some raw power for thermal efficiency, which is crucial for dense multi-GPU installations. With 96GB at 1.8 TB/s, it can run inference on a 70B model at 8-bit quantization (roughly 70GB of weights) with memory to spare for a large context window.
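To put numbers on that context headroom, here is a back-of-the-envelope sketch. It assumes the Llama 3.x 70B architecture (80 layers, 8 key/value heads via grouped-query attention, head dimension 128), 1 byte per weight at 8-bit, an unquantized FP16 KV cache, and decimal gigabytes. It ignores activations, the CUDA context, and framework overhead, so the real headroom is smaller than what it reports.

```python
# Back-of-the-envelope VRAM budget for a 70B model on a single 96GB card.
# Assumed Llama 3.x 70B shape: 80 layers, 8 KV heads (GQA), head_dim 128.
N_LAYERS = 80
N_KV_HEADS = 8
HEAD_DIM = 128

def weights_bytes(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in bytes."""
    return n_params * bits_per_weight / 8

def kv_bytes_per_token(bytes_per_elem: int = 2) -> int:
    """K and V entries for every layer, for one token (FP16 = 2 bytes)."""
    return 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * bytes_per_elem

def max_context_tokens(vram_bytes: float, weight_bytes: float,
                       bytes_per_elem: int = 2) -> int:
    """Tokens of KV cache that fit in the VRAM the weights leave free.
    Ignores activations and runtime overhead, so this is an upper bound."""
    free = max(0.0, vram_bytes - weight_bytes)
    return int(free // kv_bytes_per_token(bytes_per_elem))

VRAM = 96e9                   # 96GB, decimal units for simplicity
w8 = weights_bytes(70e9, 8)   # ~70GB at 8 bits per weight

print(f"KV cache per token: {kv_bytes_per_token() / 1024:.0f} KiB")
print(f"Max context with 8-bit weights: {max_context_tokens(VRAM, w8):,} tokens")
print(f"KV cache for a full 128K context: {kv_bytes_per_token() * 131072 / 1e9:.1f} GB")
```

Under these assumptions, roughly 79K tokens of FP16 KV cache fit beside the 8-bit weights, while a full 128K-token context would need about 43GB of KV cache on its own. Passing `bytes_per_elem=1` models an 8-bit KV cache, which halves the per-token cost.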

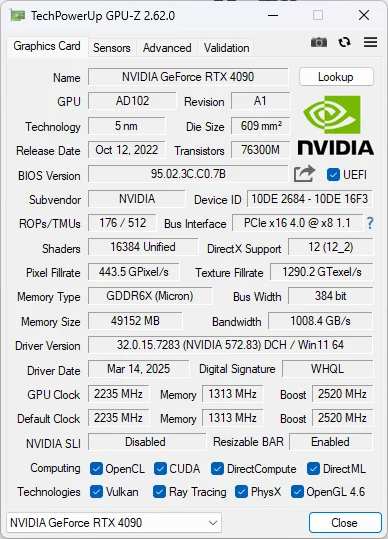

The Chinese modified RTX 4090, on the other hand, takes a more… unconventional approach. These cards, typically found on Chinese second-hand markets, feature a custom water-cooling solution (often a 360mm AIO) and report impressive thermal performance, with full-load core temperatures hovering around 49°C. Crucially, they reportedly work with the standard NVIDIA driver, alleviating some concerns about stability and future compatibility, though warranty support is, of course, highly suspect. The version with the integrated 360mm water cooler is pitched as quiet and able to sustain higher clocks: its default-clock performance is claimed to be 8.7% higher than the 48GB 4090 blower ("turbo") version, while also addressing that version's noise and temperature problems.

VRAM vs. Everything Else

For many, the allure of abundant VRAM is undeniable. Local LLM inference thrives on it, allowing for larger models and longer context lengths. The simple rule of thumb, dividing memory bandwidth by model size to estimate tokens per second, highlights the importance of both capacity and speed. A 70B-parameter model quantized to about 4 bits per weight (approximately 43GB including quantization overhead) has a theoretical ceiling of about 42 tokens/second on the RTX Pro 6000 (1,800 GB/s ÷ 43GB); real-world throughput will be lower once compute, KV-cache reads, and software overhead are factored in.
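The rule of thumb can be written as a small function. As an illustration, the sketch also estimates the dual-4090 case, assuming the modded cards keep the stock RTX 4090's 1,008 GB/s and that the model is split layer-wise across the two cards (each card holds half the layers, and for a single request the cards work one after the other). The function names are hypothetical, and both results are upper bounds, not benchmarks.

```python
def decode_tps_ceiling(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Rule of thumb: each generated token streams all weights from VRAM once.
    Ignores compute, KV-cache reads and kernel overhead: an upper bound."""
    return bandwidth_gb_s / model_size_gb

def layer_split_tps_ceiling(bw_per_card_gb_s: float, model_size_gb: float,
                            n_cards: int) -> float:
    """Same bound when layers are split across cards that run sequentially
    for a single request: each card reads its shard, then hands off."""
    shard_gb = model_size_gb / n_cards
    seconds_per_token = n_cards * (shard_gb / bw_per_card_gb_s)
    return 1.0 / seconds_per_token

MODEL_GB = 43.0             # 70B at ~4 bits per weight, per the article
PRO_6000_BW_GB_S = 1800.0   # 1.8 TB/s expressed in GB/s
RTX_4090_BW_GB_S = 1008.0   # stock 4090 spec; assumed unchanged on the 48GB mod

pro_ceiling = decode_tps_ceiling(PRO_6000_BW_GB_S, MODEL_GB)
dual_ceiling = layer_split_tps_ceiling(RTX_4090_BW_GB_S, MODEL_GB, n_cards=2)

print(f"RTX Pro 6000, 70B @ ~4-bit: <= {pro_ceiling:.1f} tokens/s")
print(f"2x 48GB 4090 (layer split): <= {dual_ceiling:.1f} tokens/s")
```

With a layer split, the second card adds capacity but not single-stream speed, because the cards take turns. Tensor parallelism lets both cards read weights at the same time, at the cost of inter-GPU communication, so its real-world gain depends on the software and interconnect.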

However, raw VRAM capacity isn’t the only factor. The RTX Pro 6000 brings enterprise-grade features to the table: ECC memory, professional drivers, and vGPU support. These are critical for businesses and researchers demanding stability and reliability. The modified 4090s, lacking these features, are clearly positioned for the more adventurous, budget-conscious enthusiast.

Performance and Reliability

While the Chinese 4090 modifications promise impressive specifications, several questions remain. The long-term reliability of these modified cards is a significant unknown. Furthermore, although they reportedly work with standard NVIDIA drivers, some users report slightly slower performance than a stock 4090. This could be due to a variety of factors, including variations in the quality of the modifications and potential BIOS limitations. Also, the blower-style versions of the modded 4090 are fairly loud.

The RTX Pro 6000, on the other hand, benefits from NVIDIA’s rigorous testing and validation. It’s a known quantity, designed for sustained, high-load operation. The choice between the two ultimately boils down to a risk/reward assessment.

The Verdict

NVIDIA's pricing of the RTX Pro 6000 is, surprisingly, competitive: it offers the most value for money among NVIDIA's professional cards. It is also pushing down prices of used A6000 and even 3090 cards, a welcome development for the community. However, the emergence of the Chinese 48GB RTX 4090 modifications throws a wrench into the established order.

For those prioritizing raw VRAM capacity and willing to accept some risk, the dual-4090 setup presents a compelling, significantly cheaper alternative to the RTX Pro 6000. It's a clear win for cost-conscious enthusiasts, particularly those focused on inference rather than training. Each modded card costs roughly half as much as an RTX 6000 Ada (often called the A6000 Ada), yet it matches that card's 48GB of VRAM while offering more memory bandwidth and more performance.

However, for professionals and businesses where stability and support are paramount, the RTX Pro 6000 remains the safer, albeit more expensive, bet. The added peace of mind, enterprise features, and guaranteed performance are likely worth the premium for many.

How about something with Apple Silicon? I think the M2 Max had a 96GB version; it might be an option too.

Yes, a MacBook Pro with an M2 Max and 96GB, or a Mac Studio with an M1 Ultra and 128GB/1TB, is an option. You can find them second-hand or refurbished for around $2,500–$3,000. But Macs have fairly slow prompt processing, which could be an issue if you're working with large context sizes.

4x RTX 3090 is a cheaper 96GB VRAM approach. Second-hand, they cost $700 each.

A four-GPU build is not straightforward to set up and, at the moment, isn't cheaper. It requires two PSUs, whereas a single- or dual-GPU setup can run on one high-powered PSU. Additionally, second-hand RTX 3090s are no longer $700: due to ongoing GPU shortages, prices have risen to between $850 and $1,000.
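The claim that a four-GPU build needs two PSUs can be sanity-checked with a rough power budget. This sketch assumes stock board power (350W per RTX 3090, 450W per RTX 4090, 600W for the flow-through RTX Pro 6000), a hypothetical 250W for the CPU, drives, and fans, a hypothetical 1,600W PSU, and the common guideline of keeping sustained load at or below about 80% of the PSU rating. The modded 48GB 4090s may draw differently from a stock card.

```python
# Rough check of whether a build fits on one PSU.
# All wattages and the 80% headroom rule are assumptions, not measurements.
SYSTEM_OVERHEAD_W = 250    # hypothetical: CPU, motherboard, drives, fans
PSU_RATING_W = 1600        # hypothetical: a large single consumer PSU
MAX_LOAD_FRACTION = 0.8    # keep sustained draw at or below ~80% of rating

BUILDS = {
    "1x RTX Pro 6000 (600W)": [600],
    "2x RTX 4090 48GB": [450, 450],
    "4x RTX 3090": [350] * 4,
}

def total_draw_w(gpu_watts: list[int]) -> int:
    """Sum of GPU board power plus the assumed system overhead."""
    return sum(gpu_watts) + SYSTEM_OVERHEAD_W

def fits_one_psu(gpu_watts: list[int]) -> bool:
    """True if the estimated draw stays within the PSU's headroom budget."""
    return total_draw_w(gpu_watts) <= PSU_RATING_W * MAX_LOAD_FRACTION

for name, gpus in BUILDS.items():
    verdict = "one PSU" if fits_one_psu(gpus) else "needs a second PSU"
    print(f"{name}: ~{total_draw_w(gpus)}W -> {verdict}")
```

Under these assumptions, the single- and dual-GPU builds stay under the 1,280W budget while four 3090s land around 1,650W. Four cards at $850–$1,000 each also come to $3,400–$4,000 before the second PSU.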