How Fast is Mac Studio M3 Ultra Running the New DeepSeek V3 LLM?

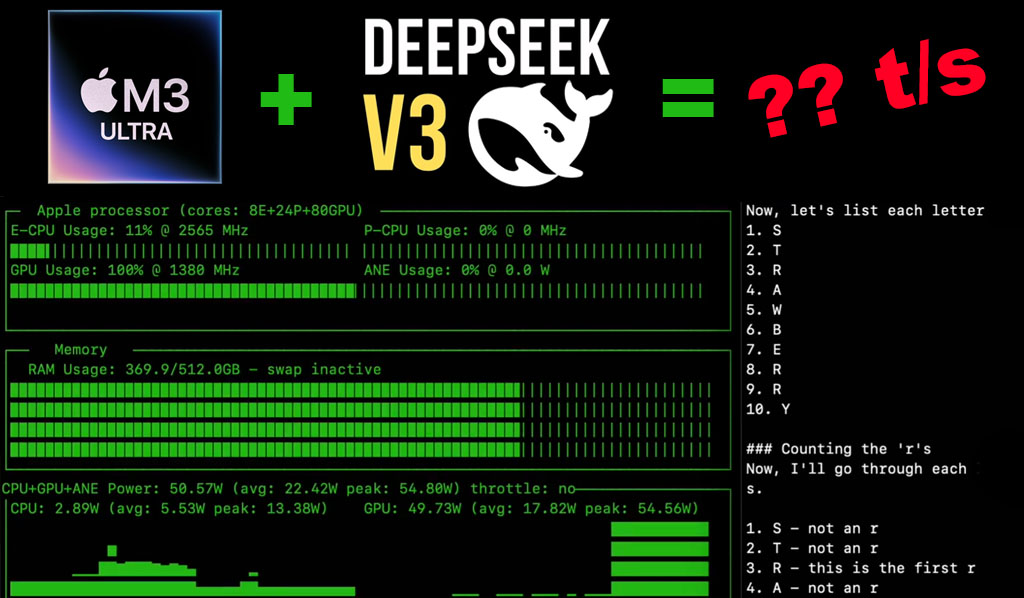

The new DeepSeek V3 checkpoint (v3-0324) was just released, and the first benchmarks for Apple’s Mac Studio M3 Ultra are already surfacing online. While most mainstream publications focus on token generation speed, real-world workloads often involve large context sizes, where prompt processing matters just as much. We know that Apple Silicon chips are great for running LLMs – but let’s see how the M3 Ultra handles this massive 671B model.

Benchmarking the M3 Ultra with DeepSeek V3 0324 in MLX

The benchmarks were conducted using MLX and DeepSeek-V3-0324 in 4-bit quantization on a Mac Studio with an M3 Ultra (80 GPU cores, 512GB unified memory, 800GB/s bandwidth). At 16K tokens, memory usage peaked at 466GB, demonstrating Apple’s advantage in handling large models locally.

The new Deep Seek V3 0324 in 4-bit runs at > 20 toks/sec on a 512GB M3 Ultra with mlx-lm! pic.twitter.com/wFVrFCxGS6

— Awni Hannun (@awnihannun) March 24, 2025

Performance Breakdown

| Context Size | Prompt Processing Speed (time) | Generation Speed |
| --- | --- | --- |
| 69 tokens | 58.08 tokens/sec (1.19s) | 21.05 tokens/sec |
| 1145 tokens | 82.48 tokens/sec (13.89s) | 17.81 tokens/sec |
| 15777 tokens | 69.45 tokens/sec (227s) | 5.79 tokens/sec |

Most media reports cite a generation rate of ~21 tokens/sec, but this only applies to small context sizes. As context grows, generation slows significantly: at roughly 16K tokens it falls to 5.79 tokens/sec, just over a quarter of the short-context rate. The drop-off comes from computational constraints, and this is where NVIDIA GPUs still shine. Prompt processing holds up better, staying between 58 and 82 tokens/sec across all three tests, because the system can apply the model parameters to many prompt tokens simultaneously (e.g., 512 tokens at once). At that point, memory bandwidth becomes less of a bottleneck, and computational power becomes the limiting factor.
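To make the effect of context size concrete, here is a minimal sketch that turns the measurements above into wall-clock time for a single request. The 500-token reply length and the `response_time` helper are hypothetical, chosen only for illustration, and the sketch assumes generation speed stays constant over the whole reply, which is optimistic since speed keeps falling as the context grows.

```python
# Measurements copied from the table above:
# (prompt tokens, prompt processing tokens/sec, generation tokens/sec)
BENCHMARKS = [
    (69, 58.08, 21.05),
    (1145, 82.48, 17.81),
    (15777, 69.45, 5.79),
]


def response_time(prompt_tokens, prompt_tps, gen_tps, output_tokens=500):
    """Return (seconds until the first token, total seconds) for one request.

    output_tokens is a hypothetical reply length, not a measured value.
    """
    prefill = prompt_tokens / prompt_tps   # time spent reading the prompt
    decode = output_tokens / gen_tps       # time spent writing the reply
    return prefill, prefill + decode


for prompt_tokens, prompt_tps, gen_tps in BENCHMARKS:
    first, total = response_time(prompt_tokens, prompt_tps, gen_tps)
    print(f"{prompt_tokens:>6} prompt tokens: first token after {first:6.1f}s, "
          f"500-token reply finished after {total:6.1f}s")
```

Under these assumptions, the 16K-token prompt takes nearly four minutes before the first token appears and a little over five minutes for the full reply, compared with under half a minute for the 69-token prompt.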

How Does DeepSeek V3 0324 Compare to DeepSeek R1?

DeepSeek V3 runs slightly faster than R1, but both models are relatively efficient given their Mixture of Experts (MoE) architecture. Unlike dense models, which use every parameter for every token, MoE architectures activate only a fraction of the model per token, significantly reducing computational load and memory traffic.
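A rough back-of-the-envelope sketch shows why this matters for generation speed. It assumes generation is limited purely by how fast weights can be read from memory, and it uses a figure that does not appear in this article: the roughly 37B parameters activated per token that DeepSeek reports for V3. Quantization overhead and KV-cache reads are ignored, so the results are ceilings rather than predictions, and `decode_ceiling` is a hypothetical helper name.

```python
BANDWIDTH_GB_S = 800      # M3 Ultra memory bandwidth, from the benchmark setup
BITS_PER_WEIGHT = 4       # 4-bit quantization (scale/bias overhead ignored)
TOTAL_PARAMS = 671e9      # model size quoted in this article
ACTIVE_PARAMS = 37e9      # assumption: active parameters per token reported by DeepSeek


def decode_ceiling(params_read_per_token, bits=BITS_PER_WEIGHT,
                   bandwidth_gb_s=BANDWIDTH_GB_S):
    """Upper bound on tokens/sec if every token reads these weights once."""
    bytes_per_token = params_read_per_token * bits / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


dense_ceiling = decode_ceiling(TOTAL_PARAMS)   # if all weights were read per token
moe_ceiling = decode_ceiling(ACTIVE_PARAMS)    # only the active experts are read

print(f"Hypothetical dense 671B model: {dense_ceiling:.1f} tokens/sec at most")
print(f"MoE with 37B active params:    {moe_ceiling:.1f} tokens/sec at most")
```

With these inputs the dense case tops out at about 2.4 tokens/sec and the MoE case at about 43 tokens/sec, so the measured ~21 tokens/sec at short context sits at roughly half of the idealized MoE ceiling.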

DeepSeek R1 Benchmarks on M3 Ultra (MLX, 4-bit Quantization)

| Context Size | Prompt Processing Speed | Generation Speed |
| --- | --- | --- |
| 1K tokens | 75.92 tokens/sec | 16.83 tokens/sec |
| 13K tokens | 58.56 tokens/sec | 6.15 tokens/sec |

While DeepSeek V3 is slightly faster, both models perform well relative to their size. However, practical usage at large context sizes (e.g., 16K – 32K) will introduce serious slowdowns.

How Does Mac Studio M3 Ultra Compare to NVIDIA GPUs?

Running DeepSeek V3 0324 locally on an NVIDIA-based setup requires an enormous amount of VRAM. To fit just the model itself in VRAM, you’d need 17 GPUs with 24GB VRAM each (408GB in total), and that’s before considering context size. Additional GPUs would be required for storing and processing long prompts, making this setup impractical for home users.

Here’s what a comparable NVIDIA setup would look like:

- 17 x RTX 3090 (24GB VRAM each) → $13,000 (second-hand market)

- Power Requirements: ~4.25 kW (17 cards, assuming 250W per underclocked 3090)

- Cooling & Infrastructure: High Complexity

Even using 8 workstation-grade NVIDIA GPUs with 48GB VRAM (e.g., A6000) would be cost-prohibitive: each card costs around $5,000 used, which comes to roughly $40,000 for 384GB of VRAM.

Apple’s Efficiency Advantage

- Mac Studio M3 Ultra (512GB unified memory) → $10,000

- Power Draw: 400W (peak)

- No additional cooling or infrastructure required

For running massive LLMs in a home environment, Apple’s Mac Studio M3 Ultra is the best practical option. Yes, $10,000 is a steep price, but it’s still more accessible than assembling a multi-GPU NVIDIA system that would cost significantly more in hardware, power, and cooling.
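The figures quoted in this comparison can be tallied with a short sketch. Every number comes from the text above; the `Setup` class and its field names are hypothetical, and the A6000 power draw is left empty because the article gives no figure for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Setup:
    name: str
    units: int
    memory_gb_each: int
    cost_usd_total: int
    power_w_total: Optional[int]  # None where the article gives no figure

    @property
    def memory_gb_total(self) -> int:
        return self.units * self.memory_gb_each


SETUPS = [
    # 17 cards at an assumed 250 W each, $13,000 for the lot (second-hand)
    Setup("17 x RTX 3090 (used)", 17, 24, 13_000, 17 * 250),
    # 8 cards at around $5,000 each
    Setup("8 x A6000 (used)", 8, 48, 8 * 5_000, None),
    Setup("Mac Studio M3 Ultra", 1, 512, 10_000, 400),
]

for s in SETUPS:
    usd_per_gb = s.cost_usd_total / s.memory_gb_total
    power = f"{s.power_w_total} W" if s.power_w_total is not None else "n/a"
    print(f"{s.name:<22} {s.memory_gb_total:>4} GB  "
          f"${s.cost_usd_total:>7,}  ${usd_per_gb:6.2f}/GB  {power}")
```

Seventeen cards at 250W each come to 4,250W, roughly ten times the Mac Studio’s 400W peak. Both GPU totals (408GB and 384GB) also fall short of the 466GB peak memory usage recorded at 16K tokens, which is why additional cards would be needed for long prompts.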

Final Verdict

If you need to run large-scale LLMs locally and work with high-context prompts, Mac Studio M3 Ultra provides the most efficient and practical solution. While it can’t match NVIDIA’s sheer compute power, its massive unified memory allows it to handle workloads that would otherwise require an impractical multi-GPU setup. For AI enthusiasts looking to run 670B+ parameter models with real-world context sizes, this is the best option available today.