Running Local LLMs? This 32GB Card Might Be Better Than Your RTX 5090—If You Can Handle the Trade-Offs

Tenstorrent, the AI and RISC-V compute company helmed by industry veteran Jim Keller, has officially opened pre-orders for its new lineup of Blackhole and Wormhole PCIe add-in cards. Aimed squarely at developers and, potentially, the burgeoning local Large Language Model (LLM) enthusiast market, these cards offer a new alternative in a space largely dominated by Nvidia.

With VRAM capacities breaching the 24GB ceiling common on consumer GPUs, Tenstorrent is making a bid for users running increasingly large models locally. But the critical question for the DIY AI community remains: do these cards make sense from a VRAM, bandwidth, and price perspective compared to established Nvidia solutions?

The rise of powerful open-weight LLMs like Llama, Qwen, Mixtral, and QwQ has fueled a demand for capable hardware outside of expensive cloud instances or enterprise data centers. Enthusiasts building custom rigs prioritize VRAM capacity to fit larger models and memory bandwidth for acceptable inference speeds (tokens per second), often turning to multi-GPU setups with used Nvidia cards like the venerable RTX 3090. Tenstorrent’s new offerings, particularly the actively cooled desktop variants, step into this highly competitive, price-sensitive arena.

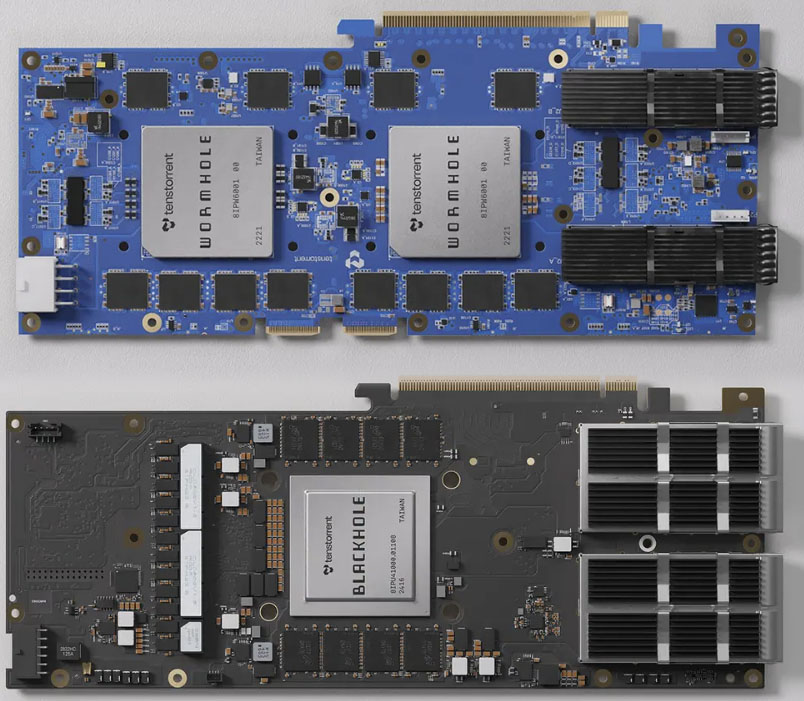

Blackhole and Wormhole Models

Tenstorrent has released several SKUs, but for desktop workstation users requiring active cooling, the focus falls on four specific models available for pre-order:

- Blackhole™ p100a: Features 120 Tensix cores, 16 “Big RISC-V” cores, 180MB SRAM, and 28GB GDDR6 memory running at 16 GT/sec for 448 GB/s bandwidth. TBP is 300W. Priced at $999.
- Blackhole™ p150a: Steps up to 140 Tensix cores, 16 “Big RISC-V” cores, 210MB SRAM, and 32GB GDDR6 memory at 16 GT/sec delivering 512 GB/s bandwidth. Includes 4x QSFP-DD 800G ports for high-speed interconnects. TBP is 300W. Priced at $1399.
- Wormhole™ n150d: Built on the previous-generation Wormhole ASIC, it offers 72 Tensix cores, 108MB SRAM, and 12GB GDDR6 at 12 GT/sec for 288 GB/s bandwidth. Includes 2x QSFP-DD 200G ports. TBP is 160W. Priced at $1099, so it costs more than the p100a while offering less VRAM and bandwidth.
- Wormhole™ n300d: Utilizes two Wormhole ASICs for a total of 128 Tensix cores, 192MB SRAM, and 24GB GDDR6 at 12 GT/sec providing 576 GB/s bandwidth. Includes 2x QSFP-DD 200G ports. TBP is 300W. Priced at $1449.
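The quoted bandwidth figures can be sanity-checked against the quoted data rates. The sketch below assumes the usual relation for GDDR memory, bandwidth = data rate × bus width / 8, and works backwards to the bus width each card would need. Tenstorrent's actual bus widths are not stated here, so the results are inferred values, not published specs, and the function name is illustrative.

```python
# (data rate in GT/s, quoted bandwidth in GB/s), copied from the spec list above.
CARDS = {
    "p100a": (16, 448),
    "p150a": (16, 512),
    "n150d": (12, 288),
    "n300d": (12, 576),  # total across two Wormhole ASICs
}


def implied_bus_width_bits(rate_gt_s: float, bandwidth_gb_s: float) -> float:
    """Bus width implied by bandwidth = rate * width / 8.

    Assumes one bit per pin per transfer; this is an inference,
    not a figure published in the article.
    """
    return bandwidth_gb_s * 8 / rate_gt_s


for name, (rate, bandwidth) in CARDS.items():
    width = implied_bus_width_bits(rate, bandwidth)
    print(f"{name}: {width:.0f}-bit memory bus implied")
```

Under that assumption, the n300d's figure works out to exactly twice the n150d's, consistent with it being a sum over two ASICs.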

Tenstorrent emphasizes its open-source approach, providing access down to the metal and offering a custom fork of the popular vLLM inference server, supporting models like Llama, Qwen, Mistral, Mixtral, and Falcon. Documentation and guides are available on their developer site.

VRAM vs. Bandwidth Trade-offs

For local LLM inference, especially token generation, memory bandwidth is king. VRAM determines if a model fits, but bandwidth dictates how fast it runs. Let’s see how Tenstorrent’s cards stack up against Nvidia’s relevant offerings, considering both MSRP and estimated current market prices (as of April 2025):

Comparison Table: Tenstorrent Blackhole vs. Nvidia RTX

| Feature | Tenstorrent p100a | Tenstorrent p150a | Nvidia RTX 3090 (Used) | Nvidia RTX 4090 | Nvidia RTX 5090 |
| --- | --- | --- | --- | --- | --- |
| VRAM | 28 GB GDDR6 | 32 GB GDDR6 | 24 GB GDDR6X | 24 GB GDDR6X | 32 GB GDDR7 |
| Bandwidth | 448 GB/s | 512 GB/s | 936 GB/s | 1008 GB/s | 1790 GB/s |
| Power (TBP) | 300W | 300W | 350W | 450W | 575W |
| Price (Current) | $999 | $1399 | ~$1000 | ~$3000 | ~$3300 |
| Price (MSRP) | $999 | $1399 | $1499 | $1599 | $1999 |
| $/GB VRAM (Current) | ~$35.68 | ~$43.72 | ~$41.67 | ~$125.00 | ~$103.13 |
| $/(GB/s) BW (Current) | ~$2.23 | ~$2.73 | ~$1.07 | ~$2.98 | ~$1.84 |
| Connectivity | PCIe | PCIe, 4x QSFP-DD 800G | PCIe | PCIe | PCIe |

Comparison Table: Tenstorrent Wormhole vs. Nvidia RTX

| Feature | Tenstorrent n150d | Tenstorrent n300d | Nvidia RTX 3090 (Used) | Nvidia RTX 4090 |
| --- | --- | --- | --- | --- |
| VRAM | 12 GB GDDR6 | 24 GB GDDR6 | 24 GB GDDR6X | 24 GB GDDR6X |
| Bandwidth | 288 GB/s | 576 GB/s | 936 GB/s | 1008 GB/s |
| Power (TBP) | 160W | 300W | 350W | 450W |
| Price (Current) | $1099 | $1449 | ~$1000 | ~$3000 |
| Price (MSRP) | $1099 | $1449 | $1499 | $1599 |
| $/GB VRAM (Current) | ~$91.58 | ~$60.38 | ~$41.67 | ~$125.00 |
| $/(GB/s) BW (Current) | ~$3.82 | ~$2.52 | ~$1.07 | ~$2.98 |
| Connectivity | PCIe, 2x QSFP-DD 200G | PCIe, 2x QSFP-DD 200G | PCIe | PCIe |
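The two derived rows in the tables are simple divisions of current price by VRAM and by bandwidth. A minimal sketch that reproduces them from the table inputs follows; the helper name `per_unit` is illustrative, and `Decimal` with half-up rounding is used only so exact halves such as 103.125 round the same way as the table.

```python
from decimal import Decimal, ROUND_HALF_UP

# (current price in USD, VRAM in GB, bandwidth in GB/s), copied from the tables above.
CARDS = {
    "p100a": (999, 28, 448),
    "p150a": (1399, 32, 512),
    "n150d": (1099, 12, 288),
    "n300d": (1449, 24, 576),
    "RTX 3090 (used)": (1000, 24, 936),
    "RTX 4090": (3000, 24, 1008),
    "RTX 5090": (3300, 32, 1790),
}


def per_unit(price: int, units: int) -> Decimal:
    """Dollars per unit, rounded half-up to cents."""
    ratio = Decimal(price) / Decimal(units)
    return ratio.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Sort by cost of bandwidth, the metric that matters most for token generation.
ranked = sorted(CARDS.items(), key=lambda item: per_unit(item[1][0], item[1][2]))
for name, (price, vram, bandwidth) in ranked:
    print(f"{name:>16}: ${per_unit(price, vram)}/GB, ${per_unit(price, bandwidth)}/(GB/s)")
```

Sorted this way, the used RTX 3090 comes first and the n150d last, while sorting by $/GB instead would put the p100a first.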

Analysis: A Mixed Bag for Enthusiasts

The tables reveal a clear narrative echoed in online enthusiast forums:

VRAM Advantage (Blackhole)

The Blackhole p150a’s 32GB and p100a’s 28GB of VRAM are compelling, exceeding the 24GB limit of the RTX 3090 and 4090. The extra capacity allows fitting larger models, or running the same model at higher precision, without immediately resorting to a multi-GPU setup. The p150a matches the 32GB of the much more expensive RTX 5090. From a pure $/GB perspective, the Blackhole cards, especially the p100a at ~$35.68/GB, look attractive against current Nvidia pricing (though the used 3090 at ~$41.67/GB remains competitive).
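To make the capacity argument concrete, here is a rough fit check for a 30B-parameter model at several quantization levels. It is a back-of-the-envelope sketch: weight size is taken as parameters × bits / 8, and the 2 GB allowance for KV cache and runtime buffers is an assumed placeholder, not a measured figure. Real usage depends on context length and the inference stack.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def headroom_gb(vram_gb: float, params_billion: float, bits_per_weight: float,
                overhead_gb: float = 2.0) -> float:
    """VRAM left after weights plus an assumed fixed overhead.

    overhead_gb is a hypothetical allowance for KV cache and buffers.
    """
    return vram_gb - weights_gb(params_billion, bits_per_weight) - overhead_gb


CARD_VRAM_GB = {"RTX 3090 / 4090": 24, "p100a": 28, "p150a / RTX 5090": 32}

for bits in (4, 6, 8):
    print(f"30B model at {bits}-bit: {weights_gb(30, bits):.1f} GB of weights")
    for card, vram in CARD_VRAM_GB.items():
        spare = headroom_gb(vram, 30, bits)
        verdict = "fits" if spare >= 0 else "does not fit"
        print(f"  {card:>16}: {verdict} ({spare:+.1f} GB headroom)")
```

Under these assumptions a 6-bit 30B model just misses a 24GB card but fits in 28GB, and an 8-bit version only squeezes into 32GB with no headroom to spare.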

Bandwidth Deficit

This is the Achilles’ heel for LLM inference. Both Blackhole cards offer roughly half the memory bandwidth of a used RTX 3090 (~$1000): 448 GB/s and 512 GB/s against 936 GB/s. The Wormhole n300d’s 576 GB/s, the combined figure for its two ASICs, is slightly higher than the p150a’s but still lags significantly behind the 3090. Some argue that high compute can matter (Tenstorrent claims high TFLOPS, potentially beneficial for prompt processing), but token generation speed is heavily correlated with bandwidth, because each generated token requires streaming the model’s weights from memory. Expect lower tokens/second compared to a 3090/4090 for models that fit on both.
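A common rule of thumb turns this into numbers: for single-stream generation with a dense model, every token requires reading roughly all the weights once, so tokens/second cannot exceed bandwidth divided by model size. The sketch below applies that ceiling to a hypothetical 20 GB quantized model that fits on every card listed. It is an upper bound under that assumption, not a benchmark, and it ignores compute limits, KV-cache traffic, and software efficiency.

```python
# Memory bandwidth in GB/s, taken from the comparison tables.
BANDWIDTH_GB_S = {
    "p100a": 448,
    "p150a": 512,
    "n300d": 576,  # combined across two ASICs; reaching it requires splitting the model
    "RTX 3090": 936,
    "RTX 4090": 1008,
    "RTX 5090": 1790,
}


def decode_ceiling_tok_s(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound on tokens/second if each token reads all weights once."""
    return bandwidth_gb_s / weights_gb


MODEL_GB = 20.0  # hypothetical model size, chosen so it fits on all cards

for card, bandwidth in BANDWIDTH_GB_S.items():
    ceiling = decode_ceiling_tok_s(bandwidth, MODEL_GB)
    print(f"{card:>9}: at most {ceiling:5.1f} tokens/s")
```

The ratios between cards are what matter here: whatever the model size, the p150a's ceiling is about 55% of the used 3090's.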

Price Point

At $999 and $1399 respectively, the p100a and p150a are priced against the used RTX 3090 market and approach the original MSRPs of the 3090 ($1499) and 4090 ($1599). The n300d at $1449 sits just below the 3090’s original MSRP. The Blackhole cards offer more VRAM for the money, but the bandwidth trade-off at this price is substantial. Many enthusiasts feel the bandwidth offered is too low for a 2025 product at these prices, drawing comparisons to much older hardware like the Tesla P100 (which had >700 GB/s in 2016, albeit at a vastly higher launch price).

Connectivity & Scaling

Here lies a potential unique advantage. The QSFP-DD ports on the p150a (800G) and n300d/n150d (200G) offer high-speed interconnects designed for multi-card scaling, potentially surpassing standard PCIe bandwidth limitations in large clusters. Tenstorrent’s focus on tensor parallelism aims to leverage this. While NVLink exists for Nvidia, it’s often expensive or limited on consumer cards. For users planning large, multi-card setups, this could be a differentiator, assuming the software stack effectively utilizes it to achieve high aggregate bandwidth.
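For a sense of scale, the port ratings can be converted from gigabits to gigabytes per second and set beside a PCIe x16 slot. This is a sketch of raw line rates only. It assumes all ports can be driven simultaneously and ignores protocol overhead on the QSFP-DD links. The PCIe generations shown are reference points for comparison, since the article does not state which generation each card uses.

```python
def gbit_to_gbyte(gbit_s: float) -> float:
    """Convert a line rate in Gb/s to GB/s."""
    return gbit_s / 8


def pcie_x16_gb_s(gt_per_lane: float) -> float:
    """Per-direction PCIe x16 throughput with 128b/130b encoding (PCIe 3.0 and later)."""
    return gt_per_lane * 16 * (128 / 130) / 8


links = {
    "p150a, 4x QSFP-DD 800G": 4 * gbit_to_gbyte(800),
    "n150d / n300d, 2x QSFP-DD 200G": 2 * gbit_to_gbyte(200),
    "PCIe 4.0 x16 (16 GT/s per lane)": pcie_x16_gb_s(16),
    "PCIe 5.0 x16 (32 GT/s per lane)": pcie_x16_gb_s(32),
}

for name, gb_s in links.items():
    print(f"{name:>32}: ~{gb_s:.1f} GB/s raw")
```

On raw numbers alone, the p150a's four ports add up to several times what a single x16 slot carries in one direction, which is the basis for the scaling argument above. Whether software achieves it is a separate question.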

Software Ecosystem

This remains a significant unknown and potential hurdle. Tenstorrent provides a vLLM fork and open drivers, but its software stack lacks the maturity, extensive tooling, and broad community support of Nvidia’s CUDA. Enthusiasts who buy these cards will be early adopters, likely facing a steeper learning curve and more troubleshooting than on the well-trodden Nvidia path with popular front-ends like Oobabooga or LM Studio.

Who Are These Cards For?

Based on the specs and community feedback, these cards aren’t a straightforward “3090 killer” for the average local LLM user focused primarily on maximizing tokens/second in a single-card setup. The bandwidth limitation is too significant.

However, they present an interesting proposition for:

- Users Prioritizing VRAM >24GB: If fitting a specific model (e.g., a 30B parameter model at higher precision, or future larger models) requires more than 24GB VRAM in a single slot and Nvidia’s pro-level cards (like the A6000 or RTX 6000 Ada) are too expensive, the Blackhole p150a ($1399 for 32GB) becomes a unique option.
- Developers & Researchers: The open-source nature and direct hardware access appeal to those wanting to experiment with novel AI architectures or avoid vendor lock-in.
- Scalability Planners: Users intending to build multi-card systems might find the high-speed interconnects on the p150a particularly attractive for efficient tensor parallelism, potentially achieving competitive performance at scale compared to multiple Nvidia cards communicating over PCIe.
- Early Adopters & Tinkerers: Enthusiasts excited by Jim Keller’s pedigree and willing to invest time in a nascent software ecosystem might jump in.

Looking Ahead: The Promise of the p300

Perhaps the most exciting prospect, frequently mentioned by informed community members citing Tenstorrent’s Dev Day, is the upcoming Blackhole p300. This anticipated card is rumored to feature two Blackhole chips on a single PCB, offering 64GB of VRAM and ~1TB/s total bandwidth (2x 512 GB/s channels with fast interconnect). If priced competitively, this card could be a genuine game-changer, directly addressing the bandwidth concerns while offering substantial VRAM, potentially making it highly competitive against even high-end Nvidia offerings for local LLMs.

Conclusion

Tenstorrent’s entry into the enthusiast-accessible PCIe market with the Blackhole and Wormhole cards is a welcome sign of competition. The Blackhole cards, particularly the p150a, break the 24GB consumer VRAM barrier at a sub-$1500 price point, a significant achievement. However, for typical local LLM inference workloads sensitive to token generation speed, the significantly lower memory bandwidth compared to price-competitive Nvidia options (especially the ubiquitous used RTX 3090) is a major drawback. The Wormhole cards appear less competitive for this specific use case based purely on VRAM and bandwidth per dollar.

The integrated high-speed networking and the promise of the upcoming p300 card, rumored at 64GB and ~1TB/s, suggest Tenstorrent is playing a longer game focused on scalability and larger memory pools. For now, these pre-orderable cards represent an intriguing, albeit niche, alternative for enthusiasts whose needs align specifically with higher single-card VRAM or multi-card scaling potential, and who are willing to navigate a less mature software ecosystem. The broader local LLM community will likely watch closely, perhaps waiting for real-world benchmarks and the arrival of the potentially more balanced p300.